Tecton & Redis: High Performance at Scale for Real-Time Machine Learning

Tecton is announcing support for Redis, delivering single-digit millisecond latency to users running at scale at roughly 14x lower cost than DynamoDB on-demand.

Organizations that use machine learning to power real-time, customer-facing interactions every day care deeply about the performance and cost of their feature store. Typical use cases include recommendations, search ranking, real-time pricing, and fraud detection. We’ve heard overwhelming demand from our high-scale customers to support the most performant and cost-effective infrastructure options for their feature store, which is why we’re delighted to announce that Tecton is launching support for two managed versions of Redis as an online store: Redis Enterprise Cloud and Amazon ElastiCache.

In this article, we’ll cover: (1) why feature stores need high-performance online stores, (2) a comparison between Redis and DynamoDB, including a technical benchmark showing that Redis can deliver roughly 3x lower latency at lower cost than DynamoDB, and (3) how to choose the online store that best fits your needs.

What does it mean for Tecton to integrate with Redis?

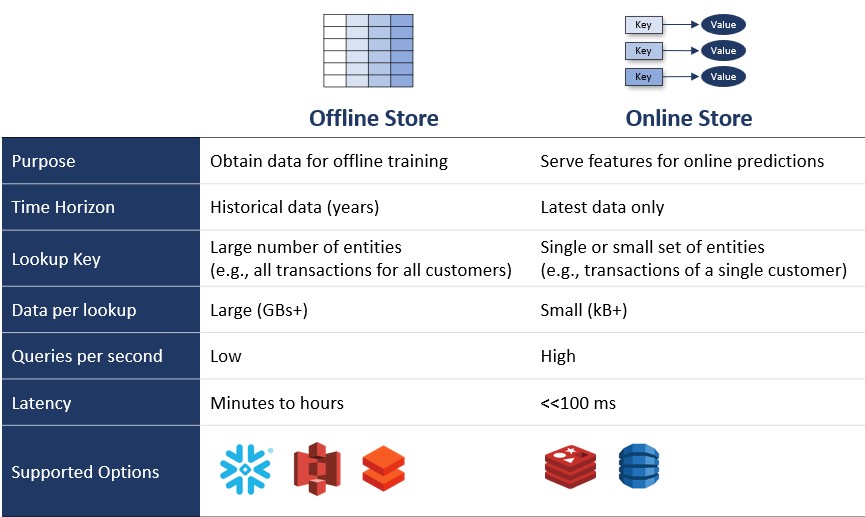

Let’s step back for a minute and briefly explain the relationship between a feature store and an online store. Feature stores must support the two main access patterns for ML: retrieving millions of rows of historical data for model training, and retrieving a single row, in a matter of milliseconds, to serve features to models running in production. Feature stores use data lakes or data warehouses as offline stores for training, and key-value stores as online stores for serving. If you want to learn more about how feature stores use an online store, read how Redis Enterprise Cloud and Tecton work together to enable real-time machine learning at scale.

Tecton is neither a compute engine nor a database. Instead, it sits on top of and orchestrates the compute and storage infrastructure that our customers already use. Tecton now supports two options for the online store, which means customers can choose between DynamoDB and Redis. This matters because, as we’ll see below, each offers different advantages, and either may be the better fit depending on the use case.

Comparing Redis vs. DynamoDB on Tecton

Both Redis and DynamoDB are NoSQL databases that use a key-value format to provide low-latency retrieval, but there are some important differences.

Redis is an in-memory database where customers provision and pay for clusters. These clusters need to be provisioned for peak capacity, and won’t automatically scale beyond that. There is a fixed cost per hour regardless of the number of read and write requests.

DynamoDB comes in two flavors: on-demand capacity mode and provisioned capacity mode. With on-demand capacity, DynamoDB will elastically scale with serving load. Customers pay for individual read and write requests, with writes being 5x more expensive than reads. Provisioned capacity mode, on the other hand, works in a similar way to Redis: customers provision for the number of reads and writes at peak capacity, and pay a fixed cost per hour.
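The difference between the two billing models can be sketched in a few lines of Python. This is a minimal illustration, not a pricing calculator: the only figure taken from this article is the 5x write-to-read price ratio, while the function names, the per-million-read price, and the hourly cluster rate are hypothetical placeholders that you would replace with current list prices.

```python
from dataclasses import dataclass

HOURS_PER_MONTH = 730  # average number of hours in a month


@dataclass(frozen=True)
class OnDemandPricing:
    """Per-request pricing. Prices passed in are placeholders, not real list prices."""
    price_per_million_reads: float
    write_to_read_ratio: float = 5.0  # the article states writes cost 5x reads

    @property
    def price_per_million_writes(self) -> float:
        return self.price_per_million_reads * self.write_to_read_ratio


def on_demand_monthly_cost(pricing: OnDemandPricing,
                           reads_per_sec: float,
                           writes_per_sec: float) -> float:
    """Pay-per-request model: cost grows linearly with sustained traffic."""
    seconds = HOURS_PER_MONTH * 3600
    reads_millions = reads_per_sec * seconds / 1e6
    writes_millions = writes_per_sec * seconds / 1e6
    return (reads_millions * pricing.price_per_million_reads
            + writes_millions * pricing.price_per_million_writes)


def provisioned_monthly_cost(hourly_rate: float) -> float:
    """Fixed-capacity model: cost is independent of the request count."""
    return hourly_rate * HOURS_PER_MONTH


# Hypothetical inputs, chosen only to show the shape of the comparison.
pricing = OnDemandPricing(price_per_million_reads=1.0)
print(on_demand_monthly_cost(pricing, reads_per_sec=1_000, writes_per_sec=100))
print(provisioned_monthly_cost(hourly_rate=2.0))
```

The on-demand cost scales with traffic while the provisioned cost stays flat, so the comparison depends heavily on how much sustained load you actually serve.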

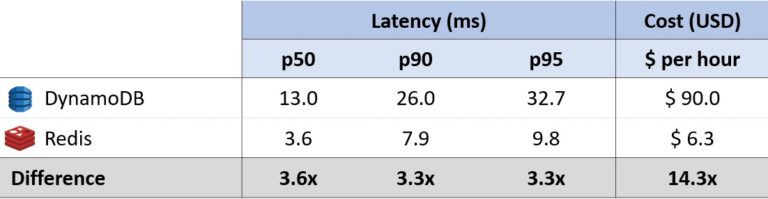

Tecton supports two online stores: Redis, and DynamoDB in on-demand mode. To evaluate both options that our users have access to, we ran a simple benchmark comparing latency and cost.

Benchmark Setup

Our benchmark simulates a workload we see with our customers that run at high scale. An application running an ML model (e.g. a recommendation system) makes requests to Tecton and gets back a series of feature vectors that it can use for inference. The benchmark issues 20,000 requests per second, and each request retrieves ten distinct feature vectors that the model uses to make a prediction.
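The request rate and the fan-out per request together determine the load on the online store. The short sketch below works through that arithmetic, assuming each feature vector translates into one online store lookup (our simplification for illustration). The result matches the 200,000 QPS peak and the 2x provisioning factor described in the appendix; the function and constant names are ours.

```python
REQUESTS_PER_SECOND = 20_000        # client requests to Tecton (from the benchmark)
FEATURE_VECTORS_PER_REQUEST = 10    # distinct feature vectors per request
HEADROOM_FACTOR = 2                 # provisioning multiple used in the appendix


def online_store_qps(requests_per_second: int, vectors_per_request: int) -> int:
    """Lookups per second hitting the online store, assuming one lookup per vector."""
    return requests_per_second * vectors_per_request


peak_qps = online_store_qps(REQUESTS_PER_SECOND, FEATURE_VECTORS_PER_REQUEST)
provisioned_qps = peak_qps * HEADROOM_FACTOR

print(peak_qps)         # 200000
print(provisioned_qps)  # 400000
```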

The latency figures we report represent the end-to-end latency as seen by a client connecting to Tecton, which includes aggregations and filtering done by Tecton for Aggregate Feature Vectors. For additional details on test setup, see notes at the end of this article.

Benchmark Results

Results show that for our benchmark workload on Tecton, Redis is ~3x faster across median and tail latencies, producing single-digit ms latencies, and ~14x less expensive than DynamoDB.

The stark difference is a result of the high scale at which we’re running these benchmarks, and we would expect the gap to shrink at lower volumes. It’s also important to remember that this is a comparison between Redis and DynamoDB on-demand mode, which is the mode that Tecton supports. Using DynamoDB provisioned mode would yield lower costs than DynamoDB on-demand mode, but we recommend that customers looking for a provisioned experience use Redis because it will still be cheaper and more performant.

Note: We ran our benchmark with Redis Enterprise Cloud, although we found similar results with Amazon ElastiCache.

Which online store should you choose?

Whether you’re a Tecton customer or building your own feature store, the answer depends on your use case. The main factors to consider are:

- Team expertise: If your team is already familiar with Amazon ElastiCache or Redis Enterprise Cloud, Redis is likely the right choice for cost and performance reasons. Redis is a popular database among developers and is easy to learn. But if your team doesn’t have working experience with Redis, DynamoDB is often a faster way to get started.

- Predictability: If your workloads are highly variable and have unpredictable traffic, DynamoDB will scale dynamically and you’ll only pay for the capacity you use, which may make it a better option. If you have predictable application traffic, Redis will be more cost effective.

- Read and write load (QPS): As your workloads become (1) higher scale and (2) more write-heavy, Redis becomes the stronger option. For read-heavy workloads at very low QPS, DynamoDB will be a better option.

- Median and tail latency requirements: If you have very strict latency requirements, Redis will be a better choice. If single-digit millisecond latency is not a requirement, DynamoDB will provide sufficient performance.

- Cost: If you are cost-sensitive and are running at scale, Redis is much more cost-effective than DynamoDB. The online store can account for up to 60% of the total cost of ownership of an ML platform, so the large price difference between Redis and DynamoDB leads to significant savings.

Time and resources permitting, a benchmark of your particular use case under realistic conditions is always the best way to determine the optimal solution for you.

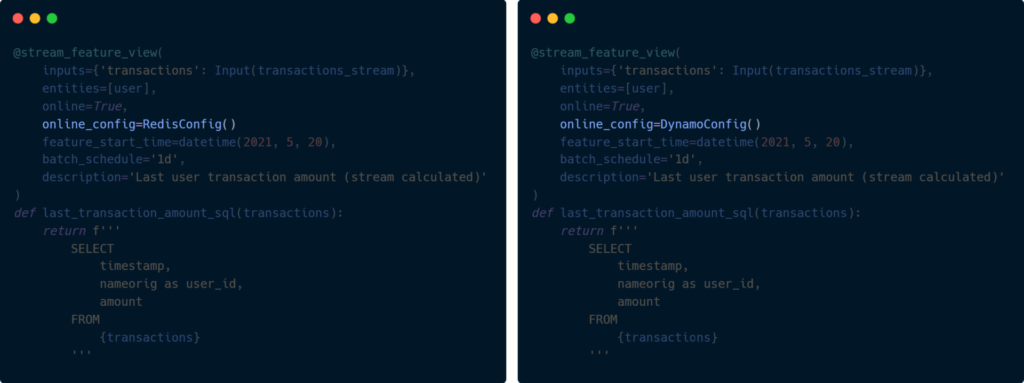

Freely choose the online store for each use case with Tecton

With the new integration, customers can choose which online store to use at an individual feature level through a single line of code. For high-volume, latency-sensitive use cases, you might decide to use Redis, while for use cases with unpredictable application traffic, you could easily use DynamoDB instead. This federated approach ensures you can maximize the benefits of each online store while abstracting away all the complexity of managing multiple online stores.
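To make the idea of per-feature store selection concrete, here is a small self-contained sketch. It does not use Tecton's SDK: `OnlineStore`, `FeatureDefinition`, `group_by_store`, the example feature names, and the DynamoDB default are all hypothetical names and choices made up for illustration. The point is only that the store choice lives on each feature definition as a single field.

```python
from collections import defaultdict
from dataclasses import dataclass
from enum import Enum


class OnlineStore(Enum):
    DYNAMODB = "dynamodb"
    REDIS = "redis"


@dataclass(frozen=True)
class FeatureDefinition:
    """Hypothetical feature definition; not part of any real SDK."""
    name: str
    # The "single line" that selects the store. The default is an assumption.
    online_store: OnlineStore = OnlineStore.DYNAMODB


def group_by_store(features: list[FeatureDefinition]) -> dict[OnlineStore, list[str]]:
    """Group feature names by the online store each one is configured to use."""
    groups: dict[OnlineStore, list[str]] = defaultdict(list)
    for feature in features:
        groups[feature.online_store].append(feature.name)
    return dict(groups)


features = [
    # High-volume, latency-sensitive feature: served from Redis.
    FeatureDefinition("user_click_count_1h", online_store=OnlineStore.REDIS),
    # Unpredictable traffic: left on the (assumed) DynamoDB default.
    FeatureDefinition("merchant_risk_score"),
]

for store, names in group_by_store(features).items():
    print(store.value, names)
```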

Try it now

Customers can now use Tecton with Redis. To learn more, see our How to Set up Redis as an Online Store documentation. If you’re not a customer and are interested in learning more, contact our team to request a free trial and get started.

Appendix: Benchmark Setup

Our benchmark latency and cost test was set up as follows:

Redis

We used Redis Enterprise Cloud to set up the Redis online store. With Redis Enterprise Cloud’s Flexible Plan we can provision databases by choosing the desired throughput and dataset size. While Redis Enterprise Cloud has an option to change the capacity on demand via an API call to only pay for used capacity, we ran the test with constant throughput and therefore did not use that option.

We provisioned the Redis cluster at 400,000 QPS, which is 2x the peak throughput in the test (200,000 QPS). We chose 2x to maintain a fair comparison to DynamoDB; with DynamoDB on-demand mode, users can scale load to 2x the previous peak within a 30-minute period without experiencing any throttling.

High availability was enabled on Redis. Tecton was connected to Redis via VPC Peering and used a private endpoint to read and write.

| Online Store | Shards | Provisioned Throughput | Dataset size |
|---|---|---|---|
| Redis Enterprise Cloud | 32 | 400,000 QPS | 5 GB |

DynamoDB

The DynamoDB setup is straightforward. We used on-demand mode with eventually consistent reads, so each query consumes 0.5 RCUs.
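The 0.5 RCUs per query figure follows from how DynamoDB sizes reads: a read is metered in 4 KB increments, and an eventually consistent read costs half of a strongly consistent one. The sketch below applies that rule to the benchmark's peak load. The 1 KB item size is a hypothetical value chosen for illustration (the article does not state item sizes), and the function name is ours.

```python
import math

READ_UNIT_BYTES = 4096  # DynamoDB meters reads in 4 KB increments


def read_units_per_query(item_size_bytes: int, eventually_consistent: bool = True) -> float:
    """Read units consumed by one read of an item of the given size."""
    increments = max(1, math.ceil(item_size_bytes / READ_UNIT_BYTES))
    # Eventually consistent reads cost half of strongly consistent reads.
    return increments * (0.5 if eventually_consistent else 1.0)


PEAK_QPS = 200_000       # peak online store throughput from the benchmark
ITEM_SIZE_BYTES = 1024   # hypothetical item size, not taken from the article

units_per_query = read_units_per_query(ITEM_SIZE_BYTES)
print(units_per_query)             # 0.5
print(PEAK_QPS * units_per_query)  # 100000.0
```

Under these assumptions, the benchmark's peak load consumes about 100,000 read units per second. Items larger than 4 KB would increase that proportionally.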