Enhancing LLM Chatbots: Guide to Personalization

Summary: Tecton introduced new GenAI capabilities (in private preview) in the 1.0 release of its SDK that make it much easier to productionize RAG applications. This post shows how AI teams can use the SDK to build hyper-personalized chatbots via prompt enrichment and automated knowledge assembly. Check out Tecton’s interactive demo to try out Tecton.

Creating personalized, context-aware chatbots is a significant generative AI challenge. While Large Language Models (LLMs) offer powerful natural language processing capabilities, they often struggle to provide accurate, up-to-date, and personalized responses. This blog post explores how Tecton’s agentic GenAI approach can enhance LLM-powered chatbots, making them more intelligent, contextual, and valuable for users.

In this article, I walk through the process of building a restaurant recommendation chatbot. Think of it as a chatbot for Yelp or a similar service. I start with a basic implementation and progressively improve its responses by adding the context and tools it needs to know about the business and the individual user.

I used Tecton’s new GenAI package to create prompts and knowledge tools that provide the business and user context through Tecton’s declarative framework. This framework is a high-level Python abstraction for building data pipelines, prompts, and knowledge tools. It enables ML and AI developers to easily create and deploy the data transformations that deliver personalized context to production applications that use ML inference and generative AI.

A Prompt Without Context

As a baseline for the chatbot’s functionality, I started with a system prompt that provides instructions on what to do but contains no user context. The LLM is instructed to act as a concierge for restaurant recommendations, to provide an address, and to suggest menu items. Without any more information, the LLM relies solely on its base training to comply.

The following code snippet shows the definition of a prompt without context, the definition of the AgentService which interacts with the LLM to serve the prompt, and a simple chatbot function to test it. Throughout this article, the `support_chatbot` function is used to test incremental changes to the AgentService and iterate through different forms of context that it can provide.

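```python
from langchain_openai import ChatOpenAI

# prompt, AgentService, and AgentClient come from Tecton's GenAI package
# (the import paths depend on the SDK version and are not shown here).


# A system prompt with instructions but no user context.
@prompt()
def sys_prompt():
    return """
    You are a concierge service that recommends restaurants.
    Always provide an address.
    Always suggest menu items.
    """


# The AgentService serves the prompt to the LLM.
service = AgentService(
    name="restaurant_recommender",
    prompts=[sys_prompt],
)


def support_chatbot(service, user_query, context=None):
    """Send one query to the agent, with optional run-time context (e.g. user_id, location)."""
    client = AgentClient.from_local(service)
    langchain_llm = ChatOpenAI(model="gpt-4-0613")
    langchain_agent = client.make_agent(langchain_llm, system_prompt="sys_prompt")
    # Make the run-time context available while the agent handles this query.
    with client.set_context(context):
        response = langchain_agent.invoke({"input": user_query})
    return response["output"]
```

An example response from this basic chatbot looks like this: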

```python
response = support_chatbot(service, "recommend a restaurant for tonight")
print(response)
```

Example output:

Certainly! I'd be happy to recommend a restaurant for tonight. Here's a suggestion:

Restaurant: The Savory Bistro

Address: 123 Main Street, Anytown, USA

The Savory Bistro is a charming restaurant known for its eclectic menu and cozy atmosphere. Here are some suggested menu items:

1. Pan-seared salmon with lemon butter sauce

2. Truffle mushroom risotto

3. Grilled ribeye steak with garlic mashed potatoes

4. Roasted vegetable quinoa bowl

They also have a great selection of wines to pair with your meal. I hope you enjoy your dining experience at The Savory Bistro!

As you can see, this response is generic and doesn’t take into account any user-specific information or location. The restaurant suggestion is completely fictional and may not be relevant to the user’s actual location or tastes.

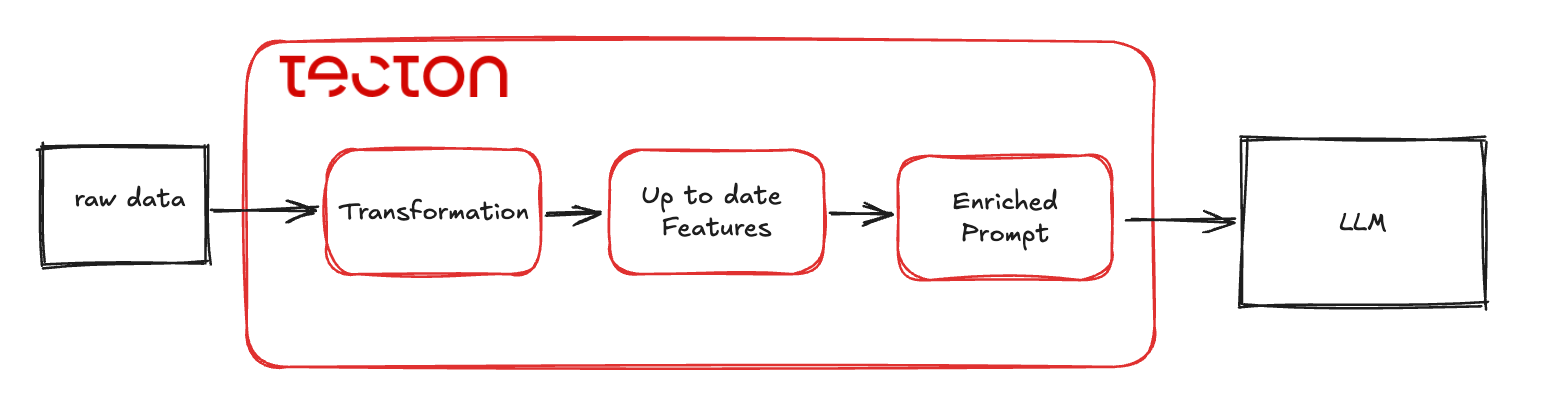

Prompt Enrichment

Enriched system prompts contain up-to-date features that give the LLM better context. In a user-facing application, this takes the form of user-specific features incorporated into the system prompt.
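Conceptually, enrichment is a feature lookup followed by template rendering. The following is a minimal, framework-free sketch of that idea, not Tecton’s implementation: `USER_FEATURES` is a hypothetical in-memory stand-in for a feature store, and `enrich_system_prompt` is a hypothetical helper that combines stored features with a request-time parameter.

```python
# Hypothetical in-memory stand-in for a feature store, keyed by user_id.
USER_FEATURES = {
    "user1": {"name": "Jim", "food_preference": "American"},
    "user2": {"name": "John", "food_preference": "Italian"},
    "user3": {"name": "Jane", "food_preference": "Chinese"},
}

SYSTEM_TEMPLATE = (
    "Address the user by their name. Their name is {name}.\n"
    "You are a concierge service that recommends restaurants.\n"
    "Only suggest restaurants that are in or near {location}.\n"
    "Always provide an address.\n"
    "Always suggest menu items.\n"
)


def enrich_system_prompt(user_id: str, location: str) -> str:
    """Fill the system prompt with stored user features and a request-time parameter."""
    features = USER_FEATURES[user_id]  # precomputed features, looked up by entity key
    return SYSTEM_TEMPLATE.format(name=features["name"], location=location)


print(enrich_system_prompt("user3", "Charlotte, NC"))
```

The stored features (the name) and the request-time value (the location) arrive through different paths. That split is the same one the Tecton code below makes with a feature view and a request source.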

I enhanced the chatbot by adding user-specific context to the system prompt. The following code creates a mock data pipeline using a Tecton feature view that provides a user’s name and food preference given a `user_id`. It also creates a request-time source that lets the prompt incorporate run-time parameters. In this case, that parameter is the user’s location, which is clearly useful when recommending where to eat.

```python
# Create a sample user info dataset
user_info = [
    {"user_id": "user1", "name": "Jim", "food_preference": "American"},
    {"user_id": "user2", "name": "John", "food_preference": "Italian"},
    {"user_id": "user3", "name": "Jane", "food_preference": "Chinese"},
]

# Mock batch feature view keyed by user_id; the description tells the LLM what it contains.
user_info_fv = make_local_batch_feature_view(
    "user_info_fv",
    user_info,
    entity_keys=["user_id"],
    description="User's basic information.",
)

# Request-time source for parameters supplied at run time, such as the user's location.
location_request = make_request_source(location=str)
```

The system prompt now incorporates the location and the user’s name, and it declares its dependency on the user_info_fv feature view (along with the location_request source). Declaring this dependency creates metadata that traces data lineage between the prompt and the feature transformation pipeline(s). The Tecton platform uses data lineage to manage dependencies and provide governance.

```python
@prompt(sources=[location_request, user_info_fv])
def sys_prompt(location_request, user_info_fv):
    return f"""
    Address the user by their name. Their name is {user_info_fv['name']}.
    You are a concierge service that recommends restaurants.
    Only suggest restaurants that are in or near {location_request['location']}.
    Always provide an address.
    Always suggest menu items.
    """


service = AgentService(
    name="restaurant_recommender",
    prompts=[sys_prompt],
)
```
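The `sources=[...]` argument is what makes lineage possible: the prompt’s dependencies are declared up front rather than hidden inside the function body. A minimal sketch of the idea, which assumes nothing about how Tecton actually stores this metadata: a hypothetical `declare_sources` decorator records source names on the function, and a hypothetical `downstream_of` helper inverts that record to answer the governance question “which prompts are affected if this source changes?”

```python
from collections import defaultdict
from typing import Callable, Dict, List


def declare_sources(*source_names: str) -> Callable:
    """Hypothetical decorator: record which sources a prompt reads from."""
    def wrap(fn: Callable) -> Callable:
        fn.sources = list(source_names)  # lineage metadata stored on the function
        return fn
    return wrap


@declare_sources("location_request", "user_info_fv")
def sys_prompt(location_request: dict, user_info_fv: dict) -> str:
    return f"Hello {user_info_fv['name']} in {location_request['location']}"


def downstream_of(prompts: List[Callable]) -> Dict[str, List[str]]:
    """Invert the recorded lineage: for each source, list the prompts that depend on it."""
    impact: Dict[str, List[str]] = defaultdict(list)
    for p in prompts:
        for src in p.sources:
            impact[src].append(p.__name__)
    return dict(impact)


print(downstream_of([sys_prompt]))
```

Because the dependencies are data rather than code, a platform can walk them in either direction: from a prompt to its upstream pipelines, or from a pipeline to every prompt that would be affected by a change.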

In a mobile app, the user_id and the location are readily available, so the app can pass them to the chatbot as the session parameters it needs for personalization. The `mobile_app_context` dictionary in the following code provides these run-time parameters:

```python
mobile_app_context = {"user_id": "user3", "location": "Charlotte, NC"}
response = support_chatbot(service, "recommend a restaurant for tonight", mobile_app_context)
print(response)
```

Example output:

Hello Jane! I'd be happy to recommend a restaurant for you tonight in Charlotte, NC. Here's a great option:

Restaurant: The Southern Charm

Address: 456 Tryon Street, Charlotte, NC 28202

The Southern Charm is a delightful restaurant that offers a modern twist on classic Southern cuisine. Here are some menu items I suggest:

1. Crispy Fried Green Tomatoes with remoulade sauce

2. Shrimp and Grits with andouille sausage and creamy stone-ground grits

3. Bourbon-Glazed Pork Chop with sweet potato mash and collard greens

4. Carolina Trout with pecan brown butter and roasted vegetables

I hope you enjoy your dinner at The Southern Charm, Jane! Let me know if you need any other recommendations.

This response is now personalized with the user’s name and location, and the suggested restaurant is more relevant because it is in Charlotte, NC. However, it still doesn’t take Jane’s food preferences or dining history into account. The next section shows how to give the LLM tools so it can request more information about the user when a query requires more context.
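The session parameters reach the prompt through `with client.set_context(context):` in `support_chatbot`. The article does not show how the SDK implements this. The following is a minimal sketch of the general pattern, assuming a context manager built on Python’s standard `contextvars` module. The names `set_context` and `current_location` here are hypothetical and not Tecton’s API.

```python
import contextvars
from contextlib import contextmanager
from typing import Iterator, Optional

# Per-request context; contextvars keeps concurrent requests (threads or asyncio tasks) isolated.
_session_context = contextvars.ContextVar("session_context", default=None)


@contextmanager
def set_context(context: Optional[dict]) -> Iterator[None]:
    """Make `context` visible to code running inside the with-block, then restore the old value."""
    token = _session_context.set(context)
    try:
        yield
    finally:
        _session_context.reset(token)


def current_location() -> str:
    """Example consumer: read a request-time parameter, falling back when no context is set."""
    ctx = _session_context.get() or {}
    return ctx.get("location", "unknown")


with set_context({"user_id": "user3", "location": "Charlotte, NC"}):
    print(current_location())  # Charlotte, NC
print(current_location())      # unknown
```

Scoping the context to a `with` block means prompts and tools can read the caller’s parameters without threading them through every function signature, and the values are cleared when the request ends.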

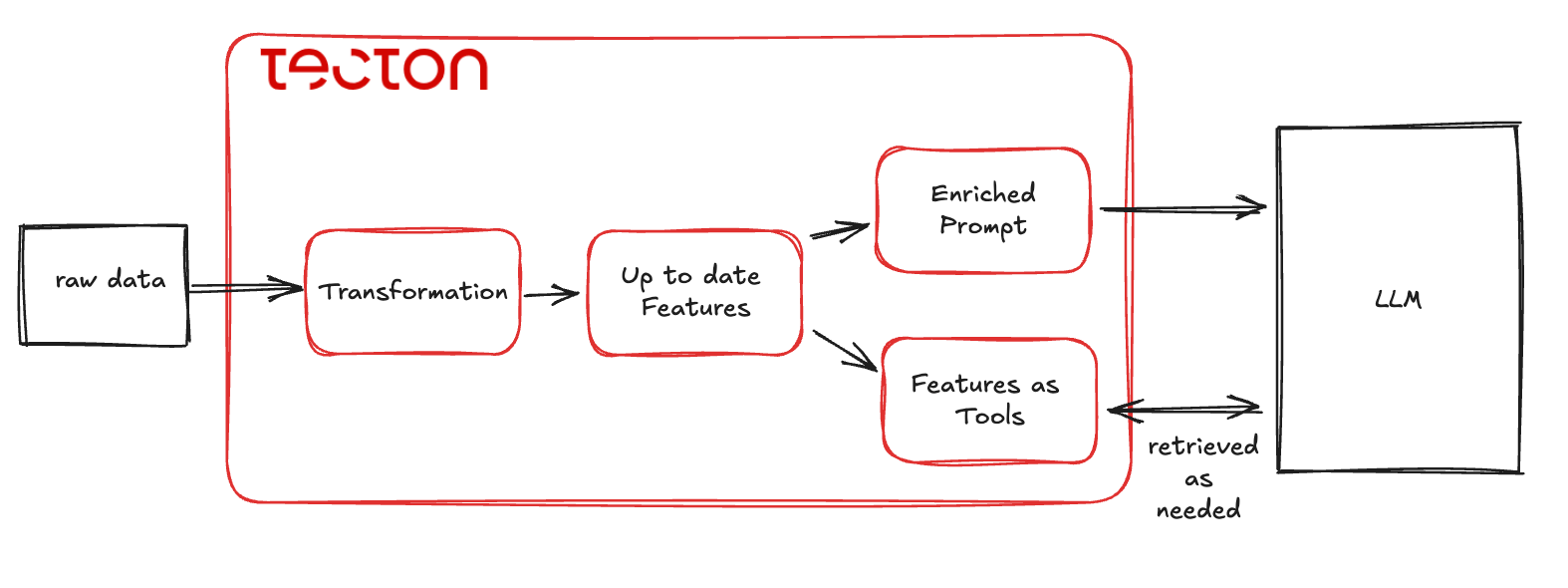

Adding Features as Tools

Most modern LLMs support tools (also known as function calling). Tools are functions that the LLM can call as needed to respond to specific user requests, either to retrieve additional data or to take some action. Unlike prompts, where features provide context for the whole conversation, tools are called only when they are needed to answer a particular question. This agentic behavior improves the relevance of the responses because the prompt is not inundated with data that the LLM may not need. It also improves the efficiency of the whole solution by limiting data retrievals to those that are actually useful.

With Tecton, any feature pipeline can be used as an LLM tool. This brings real-time, streaming, and batch data transformations to generative AI. Tecton’s low-latency, scalable retrieval system delivers these personalized feature values to the LLM application as context, increasing the overall efficiency and efficacy of the solution. The feature view’s description tells the LLM what kind of information it can retrieve when using it as a tool. This is how the LLM selects which tools are relevant to a given user request.
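To make “the description informs the LLM” concrete: function-calling APIs such as OpenAI’s accept each tool as a name, a natural-language description, and a JSON Schema for its parameters. The sketch below shows how a feature lookup could be described in that format. It illustrates the general mechanism and is not how Tecton builds its tools. In particular, exposing `user_id` as a model-supplied parameter is an assumption, since an SDK could instead fill entity keys from the session context. `feature_view_to_tool` is a hypothetical helper.

```python
import json
from typing import Dict, List


def feature_view_to_tool(name: str, description: str, entity_keys: List[str]) -> Dict:
    """Describe a feature lookup as an OpenAI-style function-calling tool.

    The description is the text the model reads when deciding whether to call the tool.
    """
    return {
        "type": "function",
        "function": {
            "name": name,
            "description": description,
            "parameters": {
                "type": "object",
                "properties": {key: {"type": "string"} for key in entity_keys},
                "required": list(entity_keys),
            },
        },
    }


tools = [
    feature_view_to_tool("user_info_fv", "User's basic information.", ["user_id"]),
    feature_view_to_tool("recent_eats_fv", "User's recent restaurant visits.", ["user_id"]),
]
print(json.dumps(tools[1], indent=2))
```

Since the model only ever sees the name, description, and parameter schema, a vague description makes a tool harder for the model to choose correctly. That is why the feature view descriptions in this article are written for the LLM as much as for humans.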

I enhanced the chatbot by adding features as tools to give it access to particular user-specific information on demand.

The following code snippet introduces another sample dataset that lists the last 3 restaurants each user has visited. The feature view’s description, “User’s recent restaurant visits.”, tells the LLM what kind of information it can retrieve from the tool. The LLM will decide to use it if the user’s request needs this knowledge about the user in order to respond.

The new AgentService incorporates the prompt and lists two feature views as its tools, giving the LLM access to the user’s food_preference through user_info_fv and to their recent restaurant visits through recent_eats_fv.

```python
# Add recent restaurant visits (each list is stored as its string representation)
recent_eats = [
    {"user_id": "user1", "last_3_visits": str(["Mama Ricotta's", "The Capital Grille", "Firebirds Wood Fired Grill"])},
    {"user_id": "user2", "last_3_visits": str(["Mama Ricotta's", "Villa Antonio", "Viva Chicken"])},
    {"user_id": "user3", "last_3_visits": str(["Wan Fu", "Wan Fu Quality Chinese Cuisine", "Ru San's"])},
]

recent_eats_fv = make_local_batch_feature_view(
    "recent_eats_fv",
    recent_eats,
    entity_keys=["user_id"],
    description="User's recent restaurant visits.",
)

# Serve the prompt and expose both feature views as tools the LLM can call on demand.
service = AgentService(
    name="restaurant_recommender",
    prompts=[sys_prompt],
    tools=[user_info_fv, recent_eats_fv],
)
```
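When the model decides it needs one of these tools, it returns a tool call (a tool name plus JSON-encoded arguments). The application then runs the lookup and sends the result back to the model. The following is a minimal sketch of that dispatch step. Hypothetical in-memory dictionaries stand in for the two feature views, and `run_tool_call` is a hypothetical helper, not part of Tecton’s SDK. Only the tools the model asks for are executed, which is where the efficiency gain described above comes from.

```python
import json
from typing import Callable, Dict

# Hypothetical in-memory stand-ins for user_info_fv and recent_eats_fv.
USER_INFO = {"user3": {"name": "Jane", "food_preference": "Chinese"}}
RECENT_EATS = {
    "user3": {"last_3_visits": str(["Wan Fu", "Wan Fu Quality Chinese Cuisine", "Ru San's"])},
}

# Map each tool name the model may request to the lookup that serves it.
TOOL_REGISTRY: Dict[str, Callable[[str], dict]] = {
    "user_info_fv": lambda user_id: USER_INFO.get(user_id, {}),
    "recent_eats_fv": lambda user_id: RECENT_EATS.get(user_id, {}),
}


def run_tool_call(name: str, arguments_json: str) -> str:
    """Execute one model-requested tool call and return the result as JSON for the model."""
    args = json.loads(arguments_json)  # function-calling APIs send arguments as a JSON string
    result = TOOL_REGISTRY[name](args["user_id"])
    return json.dumps(result)


print(run_tool_call("recent_eats_fv", '{"user_id": "user3"}'))
```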

The next test uses the query “I need a different restaurant for tonight,” which requires knowing which restaurants the user has already visited in order to respond appropriately. The LLM uses the recent_eats_fv tool for that purpose:

```python
mobile_app_context = {"user_id": "user3", "location": "Charlotte, NC"}
response = support_chatbot(service, "I need a different restaurant for tonight", mobile_app_context)
print(response)
```

Example output:

Hello Jane! I understand you're looking for a new restaurant to try tonight in Charlotte, NC. Based on your preference for Chinese cuisine and your recent dining history, I have a recommendation that you haven't visited recently:

Restaurant: Dragon Palace

Address: 789 Providence Road, Charlotte, NC 28207

Dragon Palace offers authentic Chinese cuisine with a modern twist. Here are some menu items I suggest:

1. Xiao Long Bao (Soup Dumplings) - a specialty you might not have tried at Wan Fu or Ru San's

2. Peking Duck - their signature dish, served with thin pancakes and hoisin sauce

3. Mapo Tofu - a spicy Sichuan dish that's different from the offerings at your recent visits

4. Chrysanthemum Fish - a delicate steamed fish dish with a light, floral flavor

This restaurant should provide a new experience for you, Jane, as it's different from your recent visits to Wan Fu, Wan Fu Quality Chinese Cuisine, and Ru San's. I hope you enjoy trying Dragon Palace tonight!

Now the chatbot considers Jane’s preference for Chinese cuisine and recommends a restaurant she hasn’t visited recently. With a little more data, such as the menu items each user selected, it could also suggest dishes that differ from what she had on her recent restaurant visits. The results become more relevant and useful for each user!
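Here the LLM applies the “not visited recently” rule itself, by reading the tool output. If you need that rule enforced deterministically, for example to pre-filter candidates before they reach the model, it takes only a few lines of code. One detail in the sample data matters here: `last_3_visits` is stored as `str([...])`, so a consumer has to parse the string back into a list. The sketch uses `ast.literal_eval`, which evaluates only Python literals and never arbitrary code. `exclude_recent` is a hypothetical helper.

```python
import ast
from typing import List


def exclude_recent(candidates: List[str], last_3_visits: str) -> List[str]:
    """Drop candidates the user visited recently.

    The sample data stores visits as str(list), so parse it back with literal_eval.
    """
    recent = set(ast.literal_eval(last_3_visits))
    return [name for name in candidates if name not in recent]


visits = str(["Wan Fu", "Wan Fu Quality Chinese Cuisine", "Ru San's"])
print(exclude_recent(["Wan Fu", "Dragon Palace", "Ru San's"], visits))  # ['Dragon Palace']
```

Storing the list natively, rather than as a string, would remove the parsing step. The string form is simply what the sample dataset above uses.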

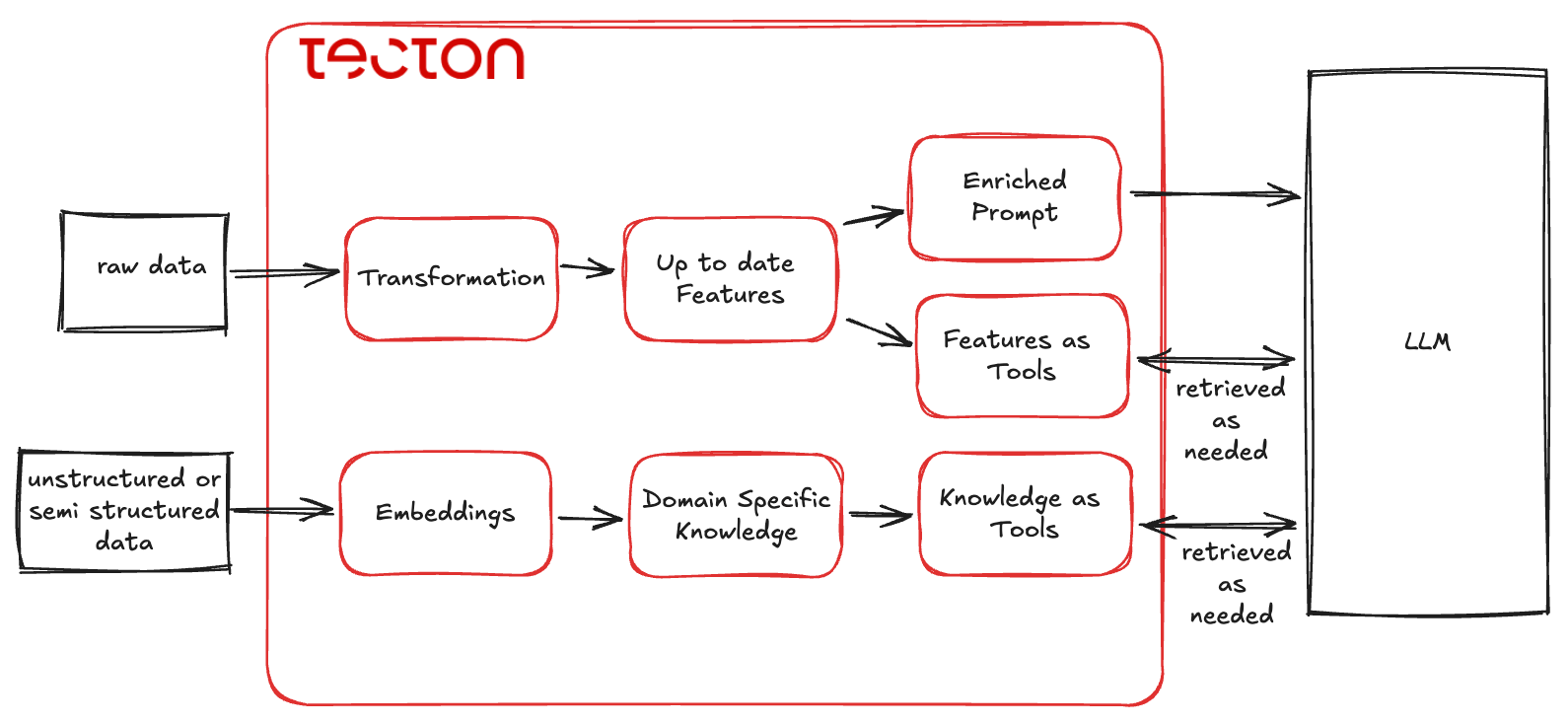

Adding Domain-Specific Knowledge

In many RAG (retrieval-augmented generation) solutions, unstructured or semi-structured data is semantically indexed and loaded into a vector database, which provides similarity search for items with similar meaning. This gives the LLM access to the domain-specific content that is most relevant to the user’s query. With knowledge as tools, just like with features as tools, the LLM requests a search of a knowledge base only when it determines that such knowledge is relevant to the user’s request.
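At its core, that similarity search ranks documents by the cosine similarity between a query vector and each document vector. The sketch below shows only the ranking step. Its `embed` function is a toy word-count stand-in for a real embedding model, so it matches shared words rather than meaning. The restaurant names and descriptions are made up for illustration. A vector database performs the same kind of ranking at scale, typically with approximate nearest-neighbor indexes.

```python
import math
import re
from collections import Counter
from typing import Dict, List, Tuple


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: lowercase word counts.

    A real RAG system uses a learned embedding model that captures meaning, not just shared words.
    """
    return Counter(re.findall(r"[a-z0-9'-]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def top_k(query: str, docs: Dict[str, str], k: int = 1) -> List[Tuple[str, float]]:
    """Return the k documents most similar to the query, best first."""
    q = embed(query)
    scored = [(name, cosine(q, embed(desc))) for name, desc in docs.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:k]


# Hypothetical sample knowledge base.
docs = {
    "Steakhouse A": "Upscale steakhouse serving dry-aged steaks and an extensive wine list.",
    "Trattoria B": "Family-friendly Italian trattoria known for homemade pasta and pizza.",
}
print(top_k("restaurant with dry-aged steaks", docs))
```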

I added domain-specific knowledge to the chatbot, allowing it to recommend only restaurants that have signed up for the service.

In the following code snippet, I adjusted the prompt to instruct the LLM to “only select restaurants that have signed up for the service.” The description of the knowledge source tells the LLM that it contains the restaurants that signed up for the service. Because that description aligns with the system prompt’s instruction, the LLM uses this knowledge base to run a similarity search on the user’s query.

```python
# Update the prompt to include the new instruction
@prompt(sources=[location_request, user_info_fv])
def sys_prompt(location_request, user_info_fv):
    return f"""
    Address the user by their name. Their name is {user_info_fv['name']}.
    You are a concierge service that recommends restaurants.
    Only suggest restaurants that are in or near {location_request['location']}.
    Always provide an address.
    Always suggest menu items.
    Only select restaurants that have signed up for the service.
    """


# Create a sample knowledge base of restaurants
restaurants_signed_up = [
    {
        "restaurant_name": "Mama Ricotta's",
        "zipcode": "28203",
        "description": "A Charlotte staple since 1992, Mama Ricotta's offers authentic Italian cuisine in a warm, family-friendly atmosphere. Known for their hand-tossed pizzas, homemade pasta, and signature chicken parmesan.",
    },
    # ... (the full code includes about 20 restaurants)
]

src = make_local_source(
    "restaurants_signed_up",
    restaurants_signed_up,
    description="Restaurants that signed up for the service",
)
```

The next section of code indexes the knowledge and loads it into a vector database. Setting `vectorize_column` to `"description"` selects that column for semantic indexing, so the user’s query is compared for similarity against the restaurants’ descriptions. The `filter` argument describes the `zipcode` column (its name, type, and meaning) so that search results can be filtered by zip code.

The code also updates the AgentService to serve the new prompt, the knowledge tool it can retrieve from and the feature tools we created before:

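```python
# conf is a vector database configuration; its definition is not shown in this article.
restaurant_knowledge = source_as_knowledge(
    src,
    vector_db_config=conf,
    vectorize_column="description",  # column embedded for semantic search
    filter=[("zipcode", str, "the zip code for the restaurant")],  # (name, type, description)
)

service = AgentService(
    name="restaurant_recommender",
    prompts=[sys_prompt],
    knowledge=[restaurant_knowledge],
    tools=[user_info_fv, recent_eats_fv],
)
```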
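The `filter` argument pairs metadata filtering with semantic search. The article does not say how Tecton applies the filter. One common pattern, sketched below on the assumption that filtering happens before ranking, is to keep only the rows whose `zipcode` matches and then rank the survivors by description similarity. Jaccard word overlap serves here as a simple, hypothetical stand-in for embedding similarity. `filtered_search` and the sample rows are invented for illustration.

```python
import re
from typing import Dict, List, Optional, Set


def tokens(text: str) -> Set[str]:
    return set(re.findall(r"[a-z0-9'-]+", text.lower()))


def jaccard(a: Set[str], b: Set[str]) -> float:
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def filtered_search(query: str, rows: List[Dict[str, str]],
                    zipcodes: Optional[Set[str]] = None, k: int = 3) -> List[str]:
    """Pre-filter rows on the zipcode metadata column, then rank by description similarity."""
    candidates = [r for r in rows if zipcodes is None or r["zipcode"] in zipcodes]
    q = tokens(query)
    scored = [(jaccard(q, tokens(r["description"])), r["restaurant_name"]) for r in candidates]
    scored = [pair for pair in scored if pair[0] > 0]  # drop rows with no overlap at all
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [name for _, name in scored[:k]]


# Hypothetical sample rows with the same shape as restaurants_signed_up.
rows = [
    {"restaurant_name": "Steakhouse A", "zipcode": "28202", "description": "Upscale steakhouse known for dry-aged steaks."},
    {"restaurant_name": "Grill C", "zipcode": "28277", "description": "Casual grill serving burgers and dry-aged steaks."},
    {"restaurant_name": "Trattoria B", "zipcode": "28277", "description": "Italian trattoria known for homemade pasta."},
]
print(filtered_search("dry-aged steaks", rows, zipcodes={"28277"}))  # ['Grill C']
print(filtered_search("dry-aged steaks", rows))                      # ['Steakhouse A', 'Grill C']
```

A strict equality filter on `28277` would exclude a restaurant in `28202`. For that reason the sketch accepts a set of zip codes, so an application could pass the user’s zip code together with nearby ones.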

This test of the chatbot makes use of the new restaurant knowledge by asking for “dry-aged steaks,” which appear as the specialty in some restaurant descriptions:

```python
mobile_app_context = {"user_id": "user1", "location": "28277"}
response = support_chatbot(service, "recommend a restaurant with dry-aged steaks", mobile_app_context)
print(response)
```

Example output:

Hello Jim! I'd be happy to recommend a restaurant with dry-aged steaks in the 28277 area. Based on the restaurants that have signed up for our service, I have a perfect recommendation for you:

Restaurant: The Capital Grille

Address: 201 N. Tryon Street, Charlotte, NC 28202 (This is in uptown Charlotte, which is a short drive from the 28277 zip code area)

The Capital Grille is an upscale steakhouse chain that's known for its excellent dry-aged steaks. Here are some menu items I suggest:

1. Dry Aged Porterhouse Steak - their signature cut, aged for 24 days for enhanced flavor and tenderness

2. Bone-in Ribeye - another excellent dry-aged option with rich marbling

3. Sliced Filet Mignon with Cipollini Onions and Wild Mushrooms - if you prefer a leaner cut

4. Lobster Mac 'N' Cheese - a popular side dish that pairs well with steak

The Capital Grille also offers an extensive wine list to complement your meal. As an American steakhouse, it should align well with your preference for American cuisine.

I noticed this restaurant is on your list of recent visits, Jim. However, given your specific request for dry-aged steaks, I believe it's still the best option among our partnered restaurants. Their consistent quality and specialization in dry-aged steaks make it worth a repeat visit.

Enjoy your dinner at The Capital Grille, Jim!

This final version of the chatbot provides a recommendation that’s not only personalized to Jim’s preferences and location, but also ensures that the suggested restaurant is one that has partnered with our service. It considers Jim’s recent visits but explains why a repeat visit might be worthwhile given his specific request for dry-aged steaks.
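The model made this trade-off in natural language. To get similar behavior from a deterministic pre-ranking step, one option is a soft penalty: subtract a fixed amount from the similarity score of each recently visited restaurant instead of excluding it outright, so that a much stronger match can still win. The `rerank` helper, its `penalty` value, and the scores below are all hypothetical.

```python
from typing import Dict, List, Set


def rerank(similarity: Dict[str, float], recent: Set[str], penalty: float = 0.2) -> List[str]:
    """Rank candidates by similarity, subtracting a fixed penalty for recent visits.

    A soft penalty (instead of a hard exclusion) lets a clearly best match win
    even if the user has been there recently.
    """
    adjusted = {name: score - (penalty if name in recent else 0.0) for name, score in similarity.items()}
    return sorted(adjusted, key=adjusted.get, reverse=True)


# A strong match survives the penalty; a marginal one does not.
print(rerank({"Steakhouse A": 0.9, "Grill C": 0.5}, {"Steakhouse A"}))  # ['Steakhouse A', 'Grill C']
print(rerank({"Steakhouse A": 0.6, "Grill C": 0.5}, {"Steakhouse A"}))  # ['Grill C', 'Steakhouse A']
```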

Conclusion

Through this step-by-step process, we’ve seen how Tecton’s GenAI package can significantly improve the capabilities of an LLM-powered chatbot:

- I started with a basic prompt that provided generic recommendations.

- I added user context to the prompt, allowing for personalized greetings and location-based suggestions.

- I implemented features as tools, giving the chatbot access to user preferences and dining history.

- Finally, I added domain-specific knowledge, ensuring that recommendations are limited to restaurants that have partnered with our service.

Each of these enhancements contributes to a more intelligent, context-aware, and useful chatbot. The final version can provide highly personalized recommendations, consider the user’s recent dining experiences, and ensure that all suggestions align with the business’s offerings.

These incremental improvements transform a generic chatbot into a highly personalized restaurant recommendation chatbot. This shows how simple it is to iterate on LLM-powered applications using Tecton’s GenAI package. Tecton is known for its robust feature pipeline automation and infrastructure orchestration. While this article only uses sample datasets, Tecton’s ability to easily convert raw streaming, batch and real-time data into useful features enables companies to use their data assets for both machine learning and generative AI applications.

By leveraging Tecton’s GenAI package, developers can create sophisticated AI-driven applications that combine the power of LLMs with real-time, streaming or batch data, the user context, and domain-specific knowledge. This approach not only improves the user experience but also allows businesses to make better use of their data assets and partnerships.

As the field of generative AI continues to evolve, the Tecton Platform and its new GenAI package will play a crucial role in bridging the gap between powerful language models and the specific needs of businesses and their customers.

If you would like to try this out for yourself, check out the Tecton Tutorial here.