apply() Highlight: How to Use Fast & Fresh Features for Online Predictions

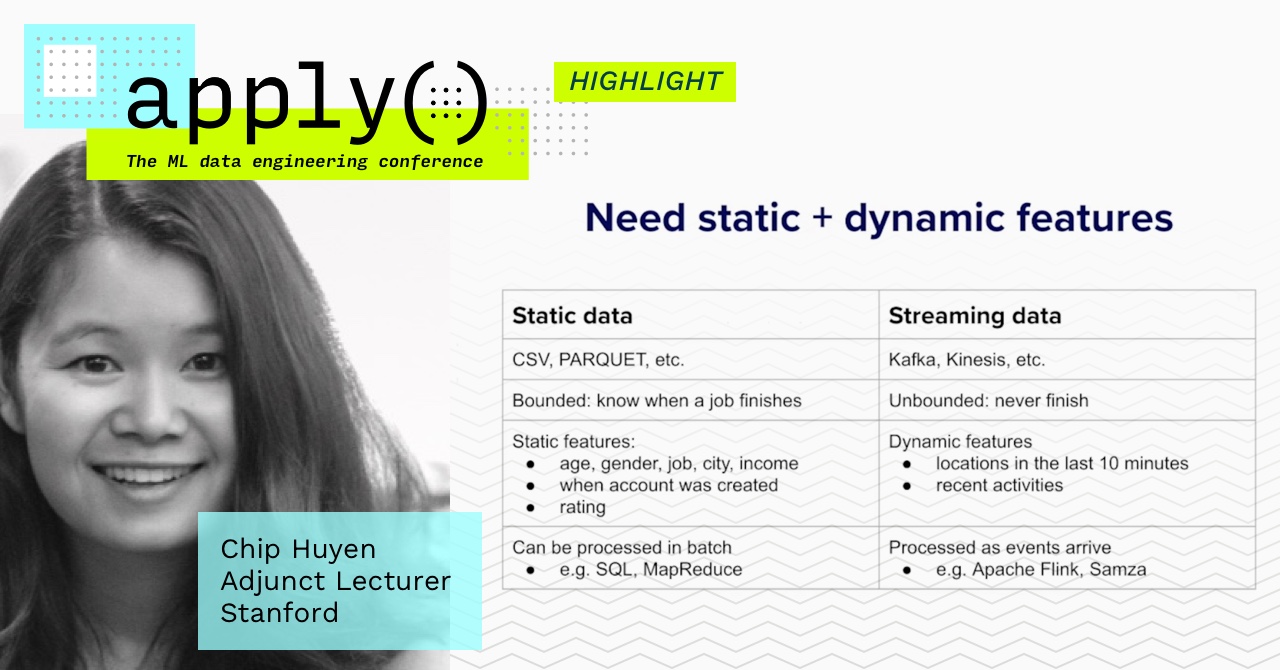

Online inference can significantly increase the impact of a machine learning model by incorporating fresh data. For example, if we’re building product recommendations for an e-commerce site, our model will benefit from information about what other products the user recently viewed. However, online inference requires careful engineering to ensure a snappy user experience.

At the apply() conference in April, Chip Huyen discussed what goes into keeping latency low during online inference, such as:

- Shortening prediction latency with smaller or faster models

- Unifying feature pipelines for offline training and online inference

Tecton’s Feature Store makes it easy for teams to serve fresh feature data for their online models.

Tecton enables Data Scientists to write a single feature definition, and seamlessly incorporate them in offline model training and online model inference. Once a feature has been added to the Feature Store, Tecton will continuously update the feature values in both the offline and online store, enabling a model to fetch the latest user data in just milliseconds.

Since models may rely on many sources of data, Tecton also simplifies the process of joining together batch, streaming, and request-time data. In Tecton, Feature Views allow data scientists to separately write features based on their data source, then define the set of features for their model in a Feature Service. Retrieving the latest feature values only requires passing in the user ID, and Tecton will return the entire feature vector for inference.

You can learn more about how Tecton accelerates the feature development process in our documentation, or request a free trial to get started today! To check out more talks from the apply() Conference in April or register for the apply() Meetup in August, click here.