5 Ways a Feature Platform Enables Responsible AI

With the dizzying speed of AI innovation, responsible AI has become a hot topic. How do businesses protect users—and their own bottom lines—when implementing new AI-powered products?

The Roadmap for Responsible AI defines principles and recommendations that can help companies navigate tough questions around AI safety. It was created by Business Roundtable, a large group of Fortune 500 CEOs representing every sector of the economy.

The roadmap is a great start to understanding responsible AI, but it stops short of saying how you should implement it. Let’s take a look at 5 responsible AI principles from the roadmap and how a feature platform enables them.

1. Mitigate the potential for unfair bias

Responsible AI requires accurate and unbiased data—and that’s where the challenge lies. It’s important to explain the relationships between AI systems’ inputs and outputs where possible and appropriate, particularly for systems that may result in high-consequence outcomes.

AI systems are only as good as the data they are trained on, and biased data can lead to biased results. Centrally managing features in a feature platform can help enforce best practices and surface machine learning (ML) models that are prone to bias. For instance, some data, like demographic data, needs to be sampled carefully—when certain categories have low membership in the original dataset, it’s difficult to retrieve a representative sample via random sampling. Furthermore, businesses may want to entirely avoid using sensitive features involving race, gender, or income.
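To make the sampling point concrete, here is a minimal sketch of stratified sampling in plain Python. It is an illustration under stated assumptions, not how any particular feature platform does it: the `stratified_sample` helper, the `group` field, and the toy dataset are all hypothetical.

```python
import random
from collections import Counter, defaultdict


def stratified_sample(rows, key, per_group, seed=0):
    """Draw up to `per_group` rows from every category of `key`.

    Plain random sampling would rarely pick members of a small category;
    sampling inside each category guarantees it is represented.
    """
    rng = random.Random(seed)  # fixed seed so the sample is reproducible
    groups = defaultdict(list)
    for row in rows:
        groups[row[key]].append(row)

    sample = []
    for category in sorted(groups):
        members = groups[category]
        k = min(per_group, len(members))  # small groups contribute all they have
        sample.extend(rng.sample(members, k))
    return sample


# Hypothetical dataset: category "C" makes up only 0.5% of the rows.
rows = (
    [{"group": "A", "value": i} for i in range(900)]
    + [{"group": "B", "value": i} for i in range(95)]
    + [{"group": "C", "value": i} for i in range(5)]
)

sample = stratified_sample(rows, key="group", per_group=5)
counts = Counter(row["group"] for row in sample)
```

With this toy data, every category contributes the same number of rows to the sample, even though category "C" is a tiny fraction of the original dataset.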

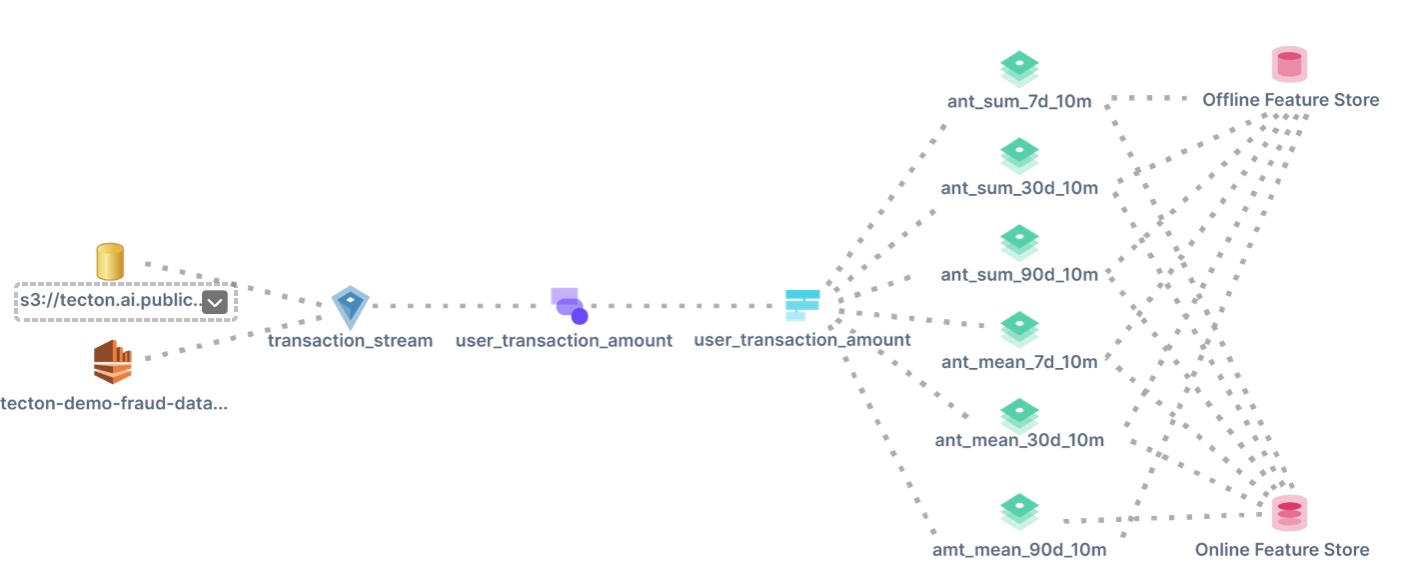

Once you have the right features for your model, a common challenge with homegrown feature stores or bespoke architectures is training-serving skew. Also known as online/offline skew, it means that the data you train your model on differs from the data the model uses to make a prediction, which can unintentionally introduce biased results.

This challenge is amplified when leveraging a mix of batch, streaming, and real-time data sources in your ML models. Feature platforms help minimize bias by ensuring data is kept in sync between model training (offline feature store) and model serving (online feature store) across all your data sources.
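One simple way to reason about training-serving skew is to compare the value a feature has in the offline store with the value served online for the same entity. The sketch below assumes both stores can be read into plain dictionaries; the `find_skew` helper and the feature names are hypothetical and only illustrate the idea.

```python
import math


def find_skew(offline, online, rel_tol=1e-6):
    """Return the features whose offline and online values disagree.

    Both arguments map entity_id -> {feature_name: numeric value}.
    The result maps entity_id -> {feature_name: (offline_value, online_value)}.
    """
    mismatches = {}
    for entity_id, offline_features in offline.items():
        online_features = online.get(entity_id, {})
        for name, offline_value in offline_features.items():
            online_value = online_features.get(name)
            # A feature missing online counts as skew too.
            if online_value is None or not math.isclose(
                offline_value, online_value, rel_tol=rel_tol
            ):
                mismatches.setdefault(entity_id, {})[name] = (
                    offline_value,
                    online_value,
                )
    return mismatches


# Hypothetical snapshots of the same features from each store.
offline = {
    "user_1": {"txn_count_7d": 12.0, "avg_amount_7d": 40.5},
    "user_2": {"txn_count_7d": 3.0, "avg_amount_7d": 19.0},
}
online = {
    "user_1": {"txn_count_7d": 12.0, "avg_amount_7d": 40.5},
    "user_2": {"txn_count_7d": 4.0, "avg_amount_7d": 19.0},
}

skew = find_skew(offline, online)
```

Here only `user_2`'s `txn_count_7d` is flagged, which is the kind of mismatch a platform that keeps both stores in sync is meant to prevent.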

2. Design for and implement transparency, explainability & interpretability

Solutions like Monitaur do a great job of providing visibility and explainability into the outputs of AI systems. Feature platforms complement them by enabling you to trust the inputs to your AI systems.

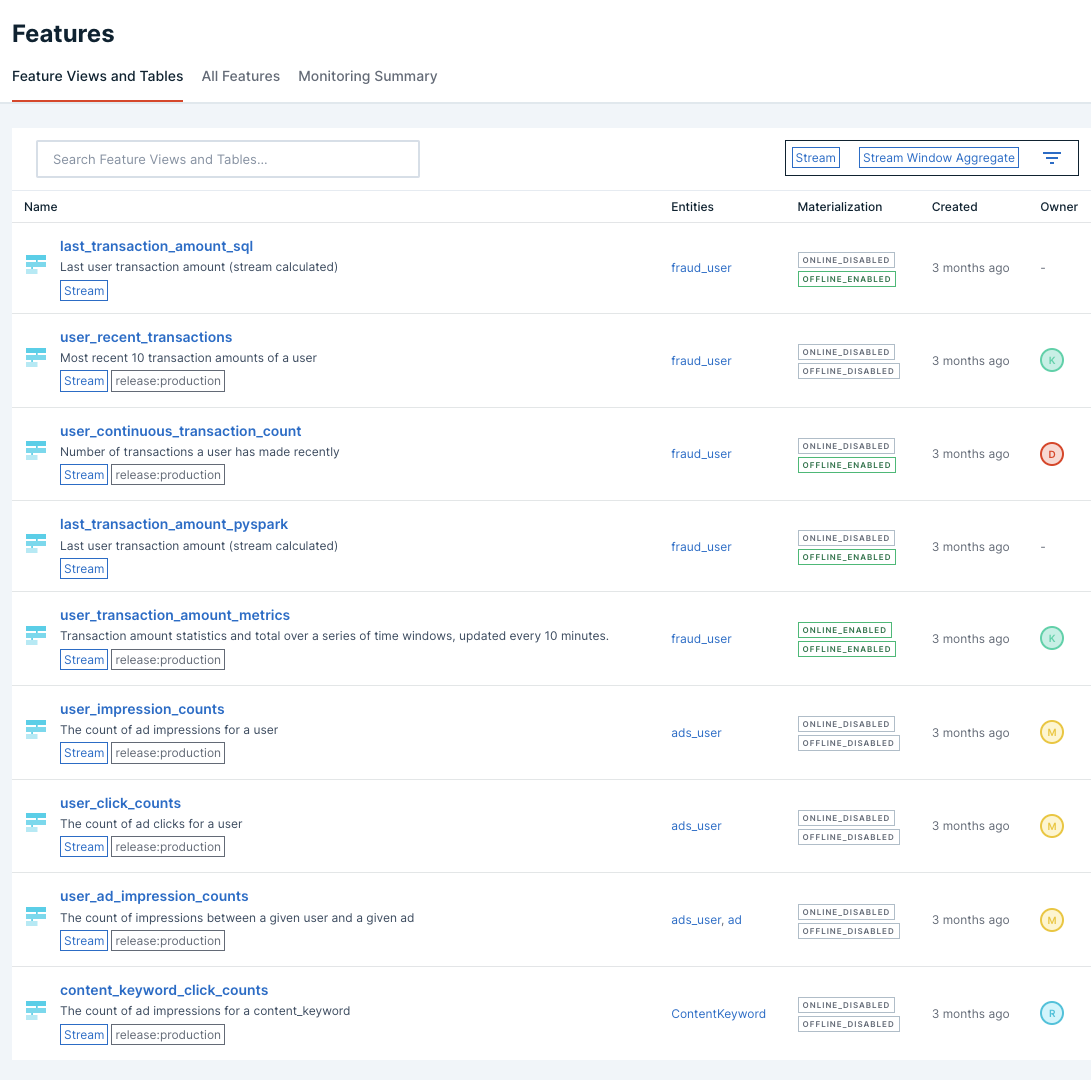

Ideally, the feature platform you use lets you define features as code and store them in a version-controlled central repository where they’re easily shared and accessed. It should also abstract away the complexity of managing your batch, streaming, and real-time data transformations. These pipelines are the biggest source of errors, confusion, and misinterpretations when you go from designing a model in a data science notebook to implementing it in production.
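As a rough picture of what "features as code" can look like, the sketch below declares a feature as a small Python object and keeps it in a registry that refuses silent overwrites. Everything here (`FeatureDefinition`, `FeatureRegistry`, the field names) is hypothetical and is not the API of Tecton, Feast, or any other product.

```python
from dataclasses import dataclass

VALID_MODES = {"batch", "stream", "realtime"}


@dataclass(frozen=True)
class FeatureDefinition:
    """A declarative feature description that can live in version control."""
    name: str
    source: str          # table or stream the feature is computed from
    mode: str            # one of VALID_MODES
    transformation: str  # kept as text so changes show up in code review
    owner: str
    version: int = 1


class FeatureRegistry:
    """In-memory stand-in for a central, shared feature repository."""

    def __init__(self):
        self._features = {}

    def register(self, feature):
        if feature.mode not in VALID_MODES:
            raise ValueError(f"unknown mode: {feature.mode!r}")
        current = self._features.get(feature.name)
        # Changing an existing feature requires an explicit version bump.
        if current is not None and feature.version <= current.version:
            raise ValueError(f"{feature.name}: version must increase")
        self._features[feature.name] = feature

    def get(self, name):
        return self._features[name]


registry = FeatureRegistry()
registry.register(
    FeatureDefinition(
        name="user_txn_count_7d",
        source="transactions",
        mode="batch",
        transformation="COUNT(*) over the last 7 days, grouped by user_id",
        owner="fraud-team",
    )
)
```

Because each definition is an immutable, named, versioned object, anyone reviewing a model can look up exactly which inputs it uses and who owns them.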

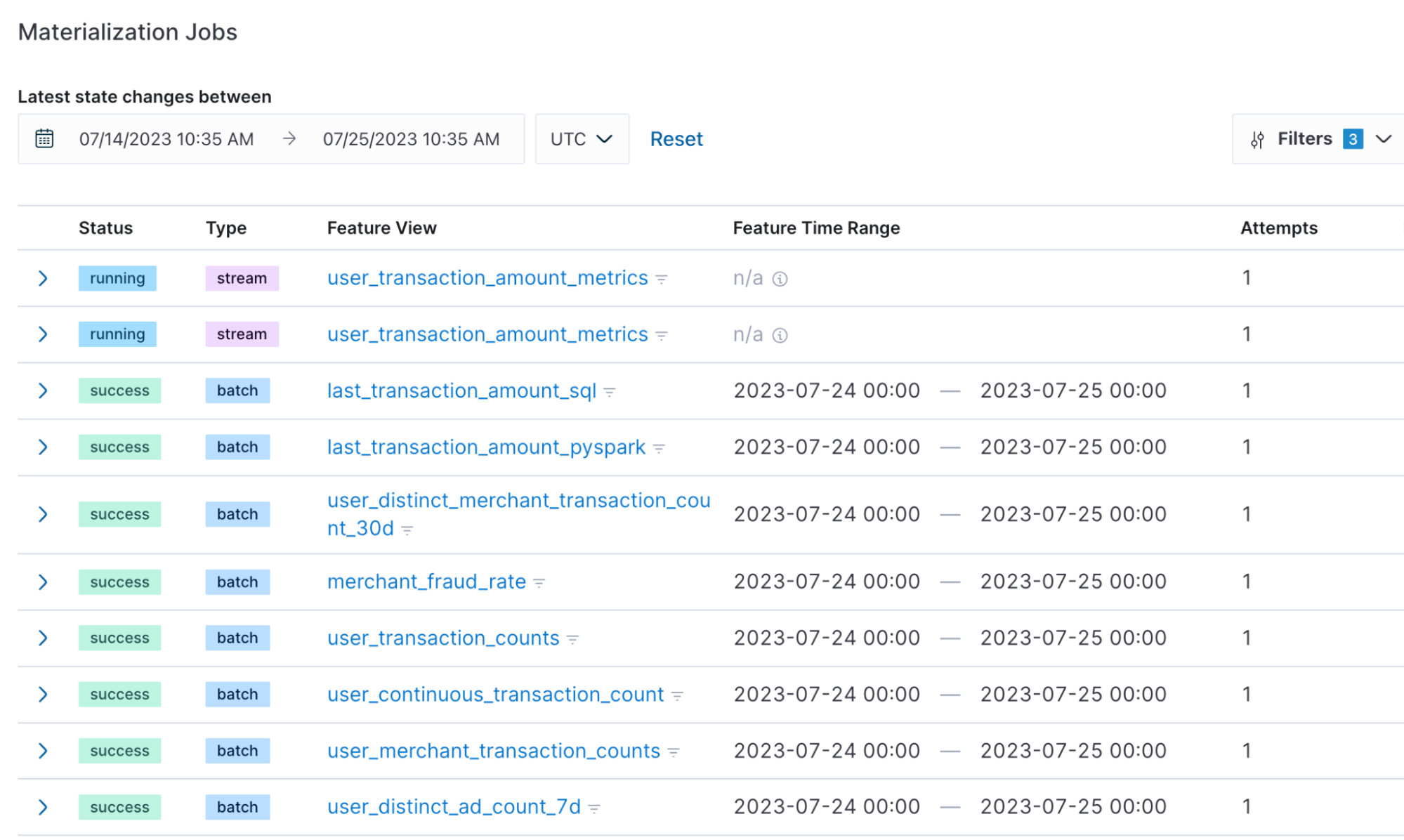

Here are some examples of the types of jobs a feature platform manages.

3. Evaluate and monitor model fitness and impact

It’s important for businesses to have clear goals when implementing AI systems—and doing so means defining and monitoring performance metrics, and ensuring there is a standard process for model testing, evaluation, and training.

Feature platforms are commonly adopted to help organizations improve speed to market for their production ML applications and unlock key real-time use cases such as fraud detection and product recommendations. These kinds of applications have millions of dollars in impact on companies’ bottom lines.

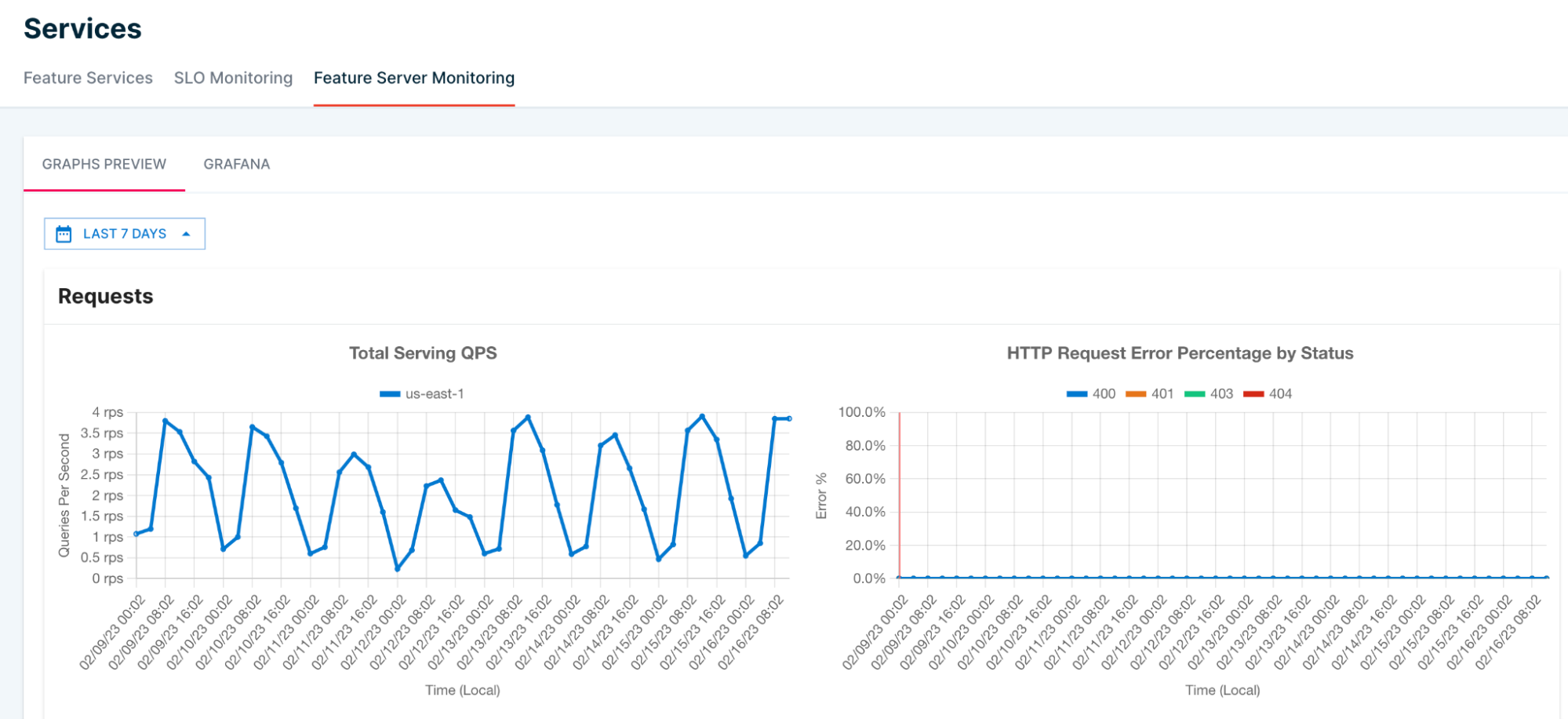

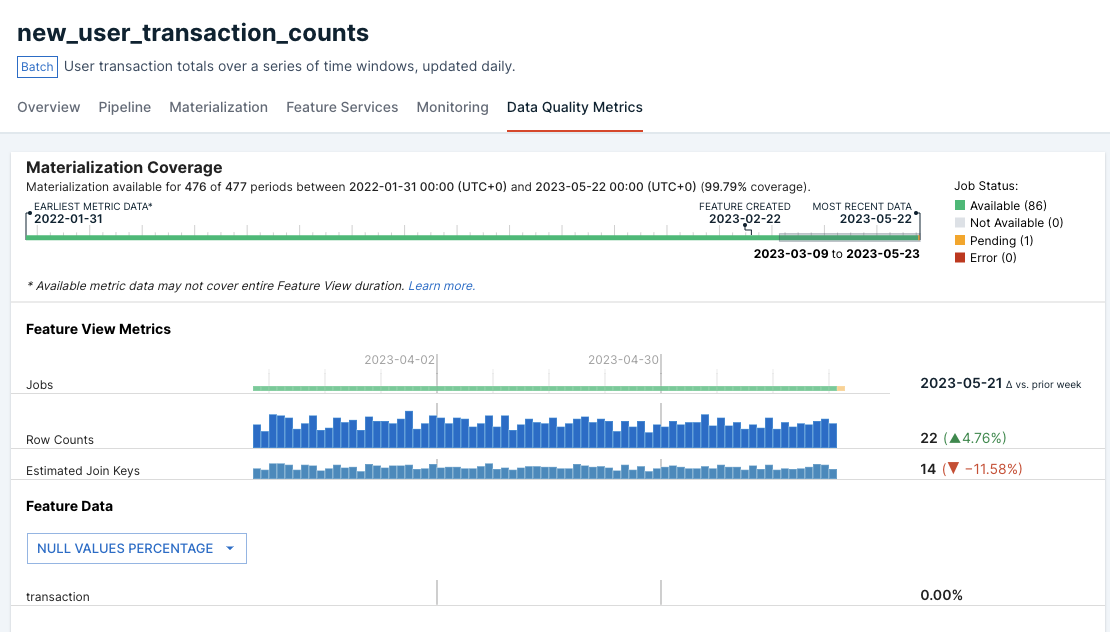

If an ML model isn’t performing as well as expected, problems with the underlying feature data are often to blame. A good feature platform can help detect and prevent these issues by delivering an end-to-end feature pipeline with built-in alerting and monitoring dashboards.
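As one example of the kind of check that alerting could be built on, the sketch below flags a feature whose serving-time mean has moved far away from its training-time mean. This is a deliberately simple heuristic chosen for illustration, not a description of any platform's built-in monitoring; the `mean_shift_alert` helper, the threshold, and the sample values are assumptions.

```python
import statistics


def mean_shift_alert(training_values, serving_values, threshold=3.0):
    """Compare the serving mean to the training mean.

    The shift is expressed in training standard deviations. Returns
    (alert, shift), where alert is True when shift exceeds `threshold`.
    """
    train_mean = statistics.fmean(training_values)
    train_std = statistics.pstdev(training_values)
    serving_mean = statistics.fmean(serving_values)

    if train_std == 0:
        # A constant training feature: any change at all is suspicious.
        shift = 0.0 if serving_mean == train_mean else float("inf")
    else:
        shift = abs(serving_mean - train_mean) / train_std
    return shift > threshold, shift


# Hypothetical values for a single numeric feature.
training = [10, 12, 11, 9, 10, 11, 12, 10]
serving_healthy = [10, 11, 12, 10]
serving_broken = [25, 27, 26, 24]  # e.g. an upstream unit change

healthy_alert, healthy_shift = mean_shift_alert(training, serving_healthy)
broken_alert, broken_shift = mean_shift_alert(training, serving_broken)
```

The healthy batch stays well under the threshold while the broken one exceeds it; a production system would typically use more robust drift statistics and route the alert to a dashboard or pager.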

When millions of dollars are at stake, monitoring for performance metrics is highly important. For instance, Tecton’s fully managed feature platform has an SLA for serving features with low latency. You can easily monitor feature performance in production and training environments via dashboards that show key observability metrics.

When organizations begin their ML journey, they will commonly use open source solutions like Feast or attempt to build homegrown systems. This results in bespoke architectures that are purpose-fit to solve the business need of the moment but may not extend to future use cases—which usually leads to tech debt along with high infrastructure and FTE costs. The ideal feature platform helps ensure that there is a standard process from the beginning that your organization can leverage across all ML models.

4. Manage data collection and data use responsibly

Organizations should confirm that data they are leveraging for their AI/ML systems is high quality, robust, and appropriate for use when collected.

Feature platforms act as the ML data layer on top of organizations’ cloud data stores like Snowflake, Databricks, EMR, or BigQuery. Some of these systems have the ability to handle batch or, in some cases, streaming data sources. Feature platforms plug in seamlessly to these data stores, manage your feature transformations into production-ready features, and unlock real-time data sources to serve features with low latency.

Once you have all of the data sources ready to go for your model, the next step is ensuring they are high quality. Feature platforms can help you visualize metrics on the quality of the data you are surfacing.
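The quality metrics in question can be as basic as how often a value is missing or falls outside a plausible range. The sketch below computes a few such numbers for one column; the `quality_report` helper, the chosen metrics, and the sample data are illustrative assumptions rather than any product's actual dashboard.

```python
def quality_report(values, min_allowed, max_allowed):
    """Summarize basic quality metrics for one numeric feature column.

    `None` marks a missing value. Rates are fractions of all rows.
    """
    total = len(values)
    present = [v for v in values if v is not None]
    out_of_range = [v for v in present if not (min_allowed <= v <= max_allowed)]
    return {
        "rows": total,
        "missing_rate": (total - len(present)) / total if total else 0.0,
        "out_of_range_rate": len(out_of_range) / total if total else 0.0,
        "min": min(present) if present else None,
        "max": max(present) if present else None,
    }


# Hypothetical "age" column with missing and implausible entries.
ages = [34, 29, None, 41, -1, 38, None, 130, 52, 47]
report = quality_report(ages, min_allowed=0, max_allowed=120)
```

For this column, two of ten rows are missing and two more are outside the allowed range, which is exactly the sort of signal worth surfacing before the feature reaches a model.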

5. Design and deploy secure AI systems

Per the roadmap, companies should analyze their systems’ impact on security and implement safeguards from the start. In addition, AI should be integrated into existing governance structures for risk management, data, and compliance.

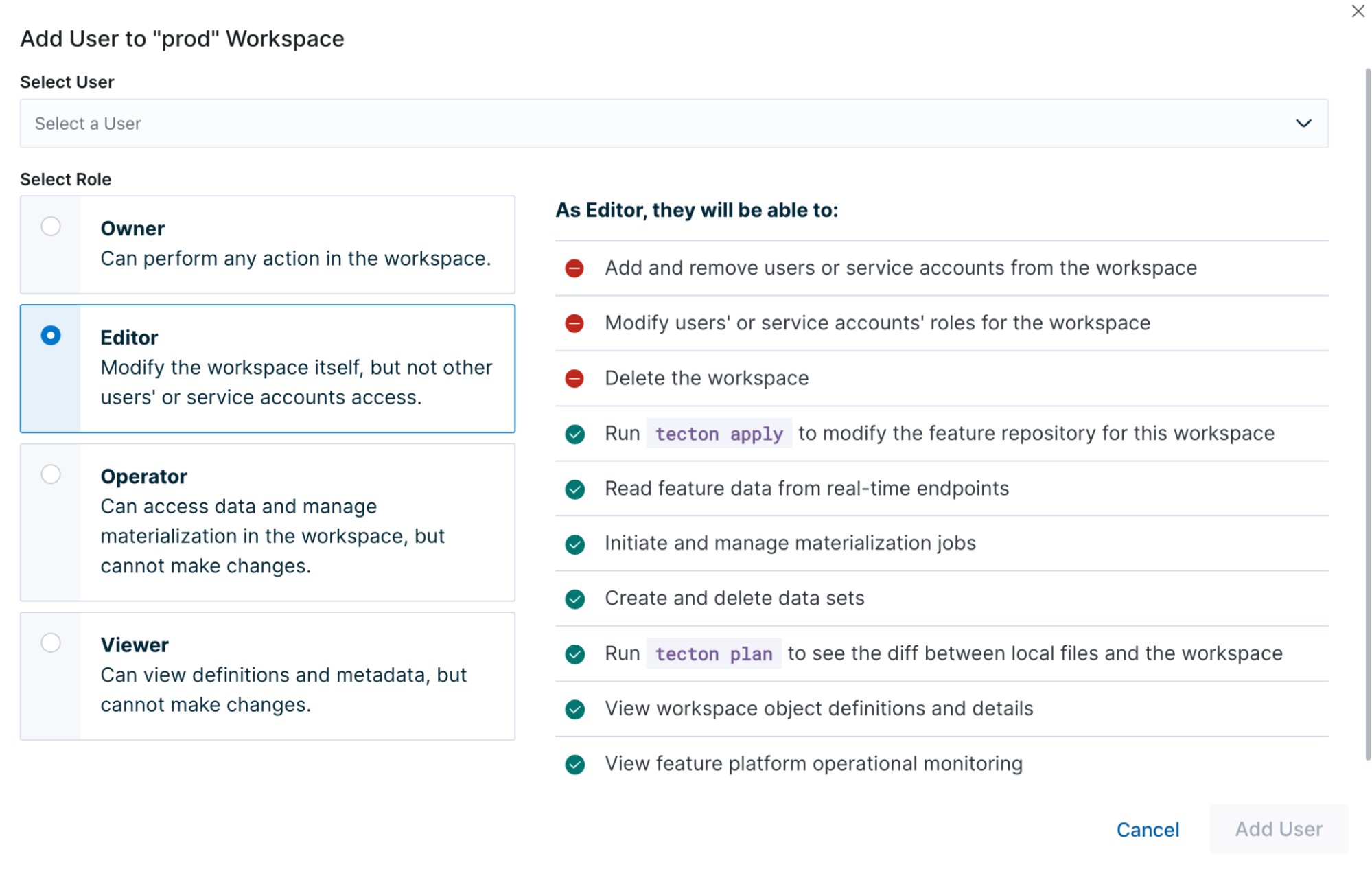

At a minimum, managed systems should have ISO 27001 and SOC 2 Type 2 certifications. Data access and protection, as well as principles like Zero Trust, must be top priorities. The system should support best practices like access control lists (ACLs), auditing, and not storing any data at rest. Unsurprisingly, managing all of these moving pieces can be extremely challenging for bespoke architectures, homegrown systems, or open source solutions.
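To show why ACLs and auditing are moving pieces you have to get right, here is a minimal sketch of a deny-by-default access check that records every decision. The `FeatureAccessControl` class, the principal names, and the action names are hypothetical; real systems would back this with an identity provider and durable audit storage.

```python
from datetime import datetime, timezone


class FeatureAccessControl:
    """Deny-by-default ACL for feature data with an append-only audit trail."""

    def __init__(self):
        self._acl = {}       # feature name -> set of (principal, action)
        self.audit_log = []  # one entry per access decision

    def grant(self, principal, feature, action):
        self._acl.setdefault(feature, set()).add((principal, action))

    def check(self, principal, feature, action):
        # Anything that was not explicitly granted is denied.
        allowed = (principal, action) in self._acl.get(feature, set())
        self.audit_log.append(
            {
                "time": datetime.now(timezone.utc).isoformat(),
                "principal": principal,
                "feature": feature,
                "action": action,
                "allowed": allowed,
            }
        )
        return allowed


acl = FeatureAccessControl()
acl.grant("fraud-service", "user_txn_count_7d", "read")

can_read = acl.check("fraud-service", "user_txn_count_7d", "read")
can_write = acl.check("fraud-service", "user_txn_count_7d", "write")
stranger = acl.check("marketing-notebook", "user_txn_count_7d", "read")
```

Only the explicitly granted read succeeds, and all three attempts, including the denied ones, end up in the audit log.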

In contrast, a good feature platform should be built with a security-first mindset and include the necessary controls for designing and deploying secure AI systems. Below is a screenshot showing how ACLs work in Tecton.

In closing, a feature platform helps address many of the challenges that businesses face when implementing AI responsibly. Responsible AI is only going to become more important in the coming years: in May 2023, the U.S. federal government announced new actions to promote responsible AI innovation [1]. Surveys show that customers will pay more for AI-powered products that are built responsibly [2], and the majority of implementers say that responsible AI has improved their products and services [3].

Managing the feature data that feeds into models is a key aspect of responsible AI. Homegrown systems and open source platforms can help get an AI use case off the ground in the short term, but they tend to drive long-term pains in an organization’s journey to responsible AI and unlocking consumer-facing ML applications.

If you’re interested in discussing a feature platform like Tecton, and how it can help with different elements of responsible AI, please reach out and schedule a personalized Tecton demo.

[3] https://sloanreview.mit.edu/article/rai-enables-the-kind-of-innovation-that-matters/