Introducing Tecton 0.8: Seamless Machine Learning Feature Development With Unparalleled Performance & Cost

We’re thrilled to announce the launch of Tecton 0.8, which marks a big step forward in our mission to accelerate real-time AI for every organization.

Tecton 0.8 improves performance and lowers infrastructure costs (by up to 100x), adds ways to develop new types of ML features, streamlines the development experience, and much more.

10-100x lower feature platform cost & serving latency

Backfilling historical feature data can often be prohibitively expensive. That’s why we launched a new Bulk Load Capability for Online Store Backfills in Tecton 0.8 that results in significant cost reductions (up to 100x) when backfilling features to the online store. Data scientists no longer need to worry about backfilling large amounts of historical data, ensuring that they have all the training data they need to optimize model performance.

Tecton’s new Feature Serving Cache also lowers cost (by upwards of 50%) as well as latency during online feature retrieval. This capability is especially impactful for high-scale feature retrieval use cases, such as a recommendation system that needs to compute features for millions of users and hundreds of thousands of products in real time. Check out our docs to learn more!

Boost ML model performance with more powerful ML features

In 0.8, we made On-Demand Feature Views more flexible and added powerful new capabilities to our Aggregation Engine.

Custom Environments for On-Demand Feature Views (available in Private Preview) enable features that rely on Python dependencies (including any pip package!) by letting you define your own custom Python environment via a requirements.txt file. Now, you can leverage Python libraries like spacy, nltk, and fuzzywuzzy or even bring your own embedding models to Tecton.

Secondary Key Aggregations enable aggregating not only over a Feature View’s entity join key(s) but also over a specified secondary key. This capability is commonly needed to build recommendation systems.

For example, consider a dataset of users and products. If product_id is set as a secondary key, Tecton will automatically compute and retrieve feature values not just for each user, but for each product that a user has interacted with. At feature request time, you now need to provide only a user_id and you’ll get the features for all associated products!
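To make the idea concrete, here is a minimal sketch in plain Python rather than the Tecton SDK. The interaction log, the `aggregate_by_secondary_key` helper, and the `get_features` lookup are all hypothetical names invented for illustration; they only mimic the behavior described above, where a request keyed on user_id returns aggregated values for every product that user has interacted with.

```python
from collections import defaultdict

# Hypothetical interaction log: (user_id, product_id, purchase_amount).
interactions = [
    ("u1", "p1", 10.0),
    ("u1", "p2", 5.0),
    ("u1", "p1", 2.5),
    ("u2", "p1", 7.0),
]


def aggregate_by_secondary_key(rows):
    """Sum amounts per (user_id, product_id), grouped under each user_id."""
    totals = defaultdict(lambda: defaultdict(float))
    for user_id, product_id, amount in rows:
        totals[user_id][product_id] += amount
    # Convert nested defaultdicts to plain dicts for predictable lookups.
    return {user: dict(products) for user, products in totals.items()}


features = aggregate_by_secondary_key(interactions)


def get_features(user_id):
    """Look up by the entity key only; every secondary key comes back."""
    return features.get(user_id, {})


print(get_features("u1"))  # one entry per product that u1 interacted with
```

The point of the sketch is the shape of the request: the caller supplies only the entity key, and the secondary key fans out on the serving side.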

Offset Windows let users shift the time window for which aggregated features are retrieved by some fixed interval instead of retrieving them based on the current time. This helps data scientists build features that are particularly relevant for fraud detection applications.

For example, setting TimeWindow(window_size=timedelta(days=7), offset=timedelta(days=-3)) will aggregate values over the window from 10 days ago to 3 days ago instead of over the past 7 days. This is especially useful when evaluating how feature values change over time (e.g., comparing values from the past day to values from all previous days in the week).
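The window arithmetic can be sketched with the standard library alone. This is not Tecton's implementation: `window_bounds` and `sum_in_window` are hypothetical helpers, and treating the window as half-open (start included, end excluded) is an assumption made for the example.

```python
from datetime import datetime, timedelta


def window_bounds(now, window_size, offset=timedelta(0)):
    """Return (start, end) of an aggregation window shifted by `offset`.

    A negative offset moves the end of the window into the past.
    """
    end = now + offset
    start = end - window_size
    return start, end


def sum_in_window(events, now, window_size, offset=timedelta(0)):
    """Sum values whose timestamps fall in [start, end) (assumed half-open)."""
    start, end = window_bounds(now, window_size, offset)
    return sum(value for ts, value in events if start <= ts < end)


now = datetime(2024, 1, 31)
start, end = window_bounds(now, timedelta(days=7), timedelta(days=-3))
print(start.date(), end.date())  # window runs from 10 days ago to 3 days ago

# Hypothetical events: (timestamp, value).
events = [
    (datetime(2024, 1, 20), 1.0),  # older than 10 days: excluded
    (datetime(2024, 1, 22), 2.0),  # inside the shifted window
    (datetime(2024, 1, 27), 4.0),  # inside the shifted window
    (datetime(2024, 1, 30), 8.0),  # within the last 3 days: excluded
]
shifted_total = sum_in_window(events, now, timedelta(days=7), timedelta(days=-3))
recent_total = sum_in_window(events, now, timedelta(days=3))
```

Comparing `shifted_total` with `recent_total` is the kind of "recent versus earlier" contrast that offset windows are meant to support.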

Seamlessly integrate your feature platform and data warehouse

Tecton 0.8 introduces the ability to publish features to a data warehouse (available in Private Preview). Now, your analytics and ML stacks no longer have to operate as separate silos. Instead, they can share consistent data and metrics. Tecton will automatically compute feature values and make them available in users’ data warehouse and analytics environments (e.g., Snowflake, Amazon Athena, Databricks), enabling feature analysis, exploration, selection, evaluation, and optionally, ML training.

Easier, faster & more reliable feature development experience

Feature Views can now have user-defined type schemas, ensuring clarity in organizations’ feature repositories. Configuring schemas can also speed up feature development by letting users optionally bypass server-side validation when applying features. For example, advanced users can now depend on custom JARs and pip packages in Spark-based Feature Views without needing these to be added to their validation environment. Additionally, to avoid any surprises, users are alerted during materialization if the output features do not match user-defined schemas!
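As a rough illustration of what a schema mismatch check looks like, here is a sketch in plain Python. It does not use the Tecton SDK, and the `schema_mismatches` helper, the column names, and the use of Python types as schema entries are all assumptions made for the example.

```python
def schema_mismatches(expected, row):
    """Compare one output row against a user-defined schema.

    `expected` maps column name to Python type; returns a list of problems.
    """
    problems = []
    for name, expected_type in expected.items():
        if name not in row:
            problems.append(f"missing column: {name}")
        elif not isinstance(row[name], expected_type):
            actual = type(row[name]).__name__
            problems.append(f"{name}: expected {expected_type.__name__}, got {actual}")
    for name in row:
        if name not in expected:
            problems.append(f"unexpected column: {name}")
    return problems


# Hypothetical schema and a row that violates it in three ways.
expected_schema = {"user_id": str, "txn_count_7d": int, "avg_amount_7d": float}
bad_row = {"user_id": "u1", "txn_count_7d": "3", "extra": 1}

for problem in schema_mismatches(expected_schema, bad_row):
    print(problem)
```

A check of this kind is what lets a platform skip up-front validation safely: the declared schema is trusted at apply time and verified against real output later.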

Tecton’s new Repo Config file lets users set defaults for features in a Tecton feature repository, resulting in simpler, easier-to-understand feature definitions. For example, customers can use this file to specify a default On-Demand Environment for their Feature Services.

The new tecton.login() function streamlines authentication and makes it easier than ever to work with Tecton in a notebook while ensuring that feature data remains secure.

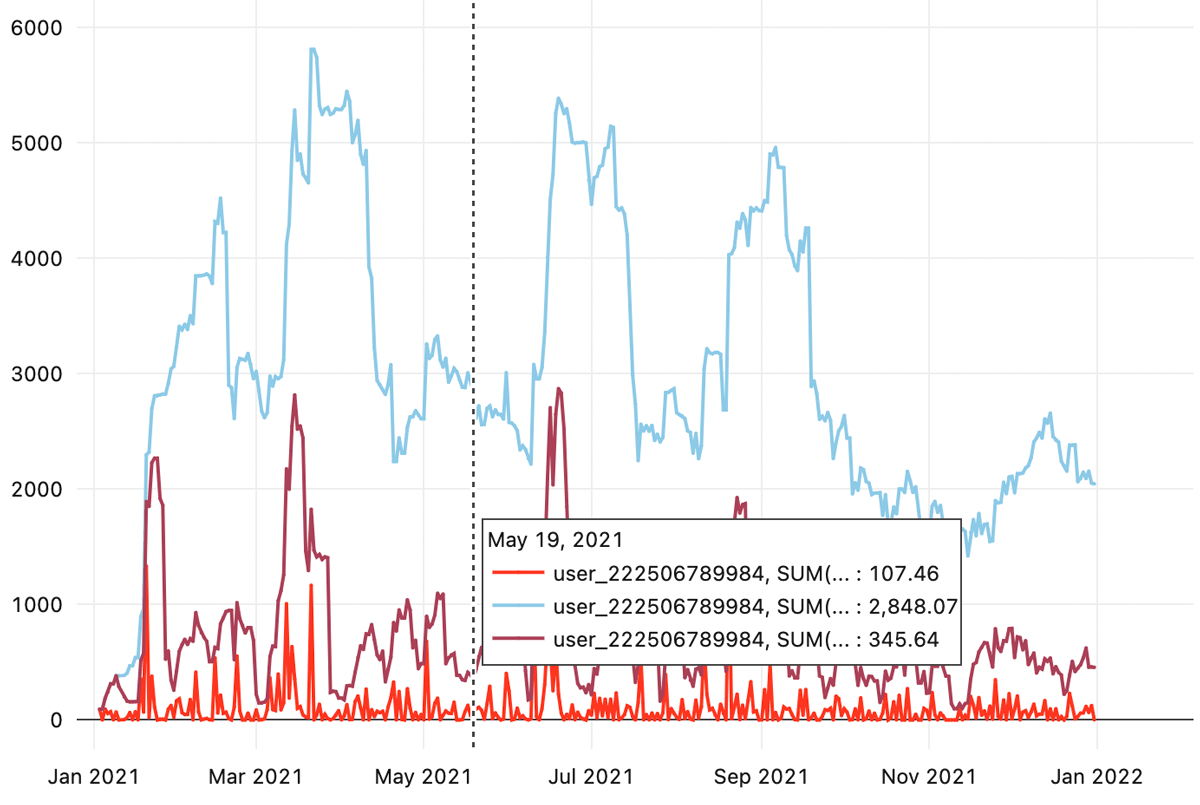

Finally, we significantly improved the usability and simplicity of offline feature retrieval and testing with new methods. These methods make it easy to generate training data from Tecton features and even track and visualize feature values over time.

Tecton 0.8, in summary

With all of these new capabilities in Tecton, it’s never been easier for ML and data teams to build and deploy powerful real-time ML systems in production. You don’t need to sacrifice model performance, infrastructure cost, or user experience. With Tecton 0.8, you can have it all!

To learn more about the new capabilities in Tecton 0.8, check out our What’s New page, and if you’re interested in trying Tecton out, please reach out!