Unlocking Real-Time AI for Everyone with Tecton

Introducing Rift – Tecton’s AI-optimized, Python-based compute engine for batch, streaming, and real-time features

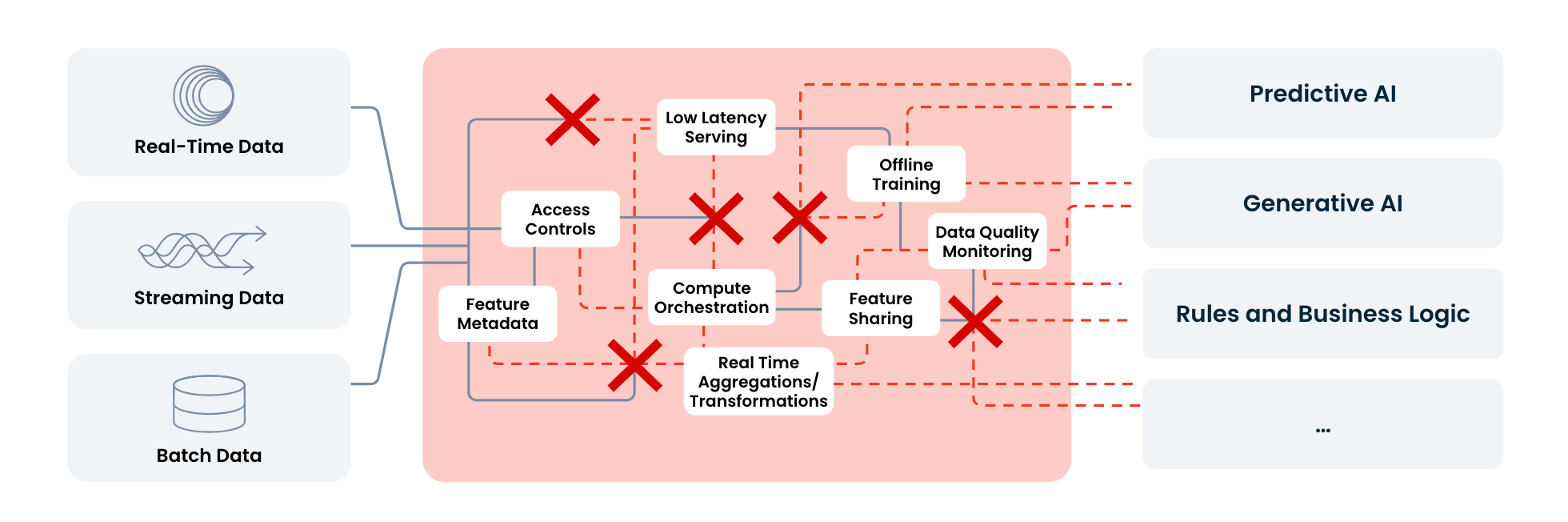

It’s no longer enough to know how your customer is doing as of yesterday. Companies need to know what is happening with a customer right now. The most sophisticated companies in tech have devoted thousands of engineers to building applications that can make automated, AI-based decisions in real time. In-app recommendations, personalized search, iron-clad fraud detection … all made possible by real-time AI. It’s these systems that have given the biggest edge to the Silicon Valley powerhouses. And while many other companies have tried to follow in their footsteps, they have struggled to achieve reliable and accurate performance at scale in production.

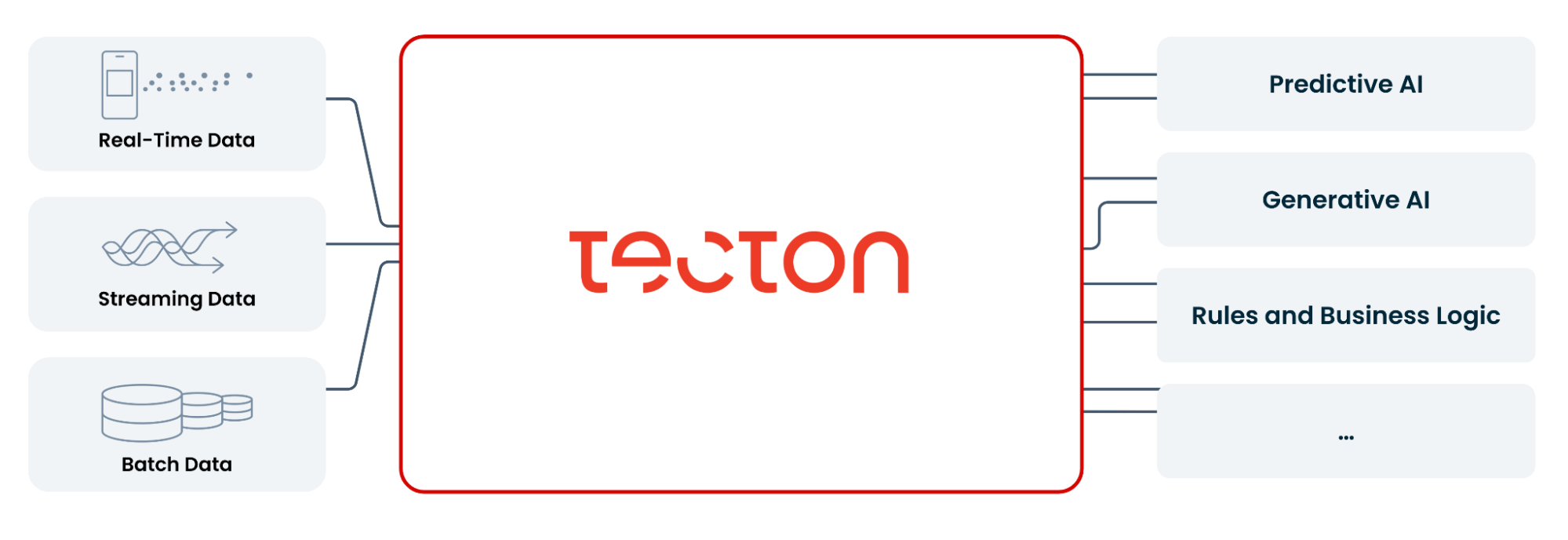

That is because real-time AI is hard. The main challenge of real-time AI is that it’s a complicated data application with unique data needs. Think low-latency feature retrieval, real-time transformations, streaming features with millisecond-level freshness, aggregations over large windows with high-cardinality data …the list goes on. Building and maintaining the data pipelines that accommodate these needs is by far the hardest part of building real-time AI applications, and most companies have not been able to crack this challenge.

For the last 5 years, the Tecton team has helped some of the most sophisticated ML teams in the world build real-time ML systems at a pace never before seen by automating the building, orchestration, and maintenance of the data pipelines for real-time AI. Our customers include companies like Roblox, Plaid, Atlassian, FanDuel, and Depop, as well Fortune 100 insurance, financial services, technology, and FinTech players. We currently power over one trillion real-time predictions per year for these customers.

Using Tecton, our customers have unlocked the potential of real-time AI by building better features across batch, streaming, and real-time data, iterating on those features at lightning speed, deploying them to production instantly, and serving them reliably at low latency and enterprise-grade scale. While powerful, these real-time AI capabilities required our customers to be proficient with complex systems like Spark.

Until today.

Introducing Rift, Tecton’s AI-optimized, Python-based compute engine

Using Tecton with Rift, it’s never been easier and faster for data teams to infuse the power of real-time AI decisioning into their customer-facing applications. Tecton with Rift is the only data platform in the market that combines the ease of use and flexibility of Python with our proven capabilities to handle the complexities of real-time AI at the reliability and scale requirements of the enterprise.

What is Rift?

Rift is Tecton’s proprietary compute engine that is optimized for AI data workflows. It is:

- Python-based: Runs Python transformations at scale in batch, streaming, or real-time

- Consistent: Processes data consistently online and offline from a single definition

- Performance-optimized: Millisecond-fresh aggregations across millions of events with no complex infrastructure

- Developer-friendly: Develop, test and run locally, and productionize instantly

- Standalone: Requires no underlying data platform or Spark. No infrastructure needed.

Rift also integrates natively with data warehouses, allowing users to define native SQL transformations that get pushed down into the warehouse. With Tecton, all transformed features can then be made available in the warehouse for analytics applications.

Using Tecton with Rift, you can:

- Build better features: Use Python to build features across batch, streaming, and real-time data

- Iterate faster: Interactively develop features in a local environment and deploy that same code to production

- Deploy instantly: Move to production with a simple `tecton apply` command, without worrying about rewriting code, infrastructure management, or pipeline orchestration

- Run with enterprise reliability and scale: Reliably power your AI models with real-time features at low latency and massive scale

Under the hood, Tecton centrally manages all features as code, productionizes the features with GitOps best practices, and builds and manages the physical data pipeline required to transform, materialize, store, serve, and monitor the features, all at enterprise-grade reliability and scale.

Use Tecton with your language and compute of choice

With Tecton, you can even mix and match Python, SQL, and Spark for features powering the same model. In instances where large-scale data processing is required, Tecton still natively supports features written in Spark. That means that some data teams can use Rift compute and write their features in Python or data warehouse native SQL, while others can choose to use Spark—and they can still collaborate in Tecton and contribute features towards the same model!

And no matter if you’re a Python, Spark, or SQL fan, the outcomes are always the same: Tecton allows your data teams to build better features, iterate faster, deploy features instantly, and run them at enterprise reliability and scale, all while controlling associated costs.

Interested in checking it out? Sign up here for our Rift private preview!